Cheaha GettingStarted: Difference between revisions


Revision as of 18:08, 27 September 2016

Cheaha is a cluster computing environment for UAB researchers. Information about the history and future plans for Cheaha is available on the cheaha page.

Access (Cluster Account Request)

To request an account on Cheaha, please submit an authorization request to the IT Research Computing staff. Please include some background information about the work you plan to do on the cluster and the group you work with, i.e. your lab or affiliation.

Usage of Cheaha is governed by UAB's Acceptable Use Policy (AUP) for computer resources.

The official DNS name of Cheaha's frontend machine is cheaha.rc.uab.edu. If you want to refer to the machine as cheaha, you can add "rc.uab.edu" to your computer's DNS search path. On Unix-derived systems (Linux, Mac) you can edit your computer's /etc/resolv.conf as follows (you'll need administrator access to edit this file):

search rc.uab.edu

Or you can customize your SSH configuration to use the short name "cheaha" as a connection name. On systems using OpenSSH you can add the following to your ~/.ssh/config file

    Host cheaha
        Hostname cheaha.rc.uab.edu
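A slightly fuller entry can also record the user name, so that `ssh cheaha` alone is enough to connect. The sketch below writes the entry to a scratch file for review rather than touching ~/.ssh/config directly. The `User` line is an addition not present in the original example, and `blazerid` is a placeholder for your own user ID.

```shell
#!/bin/bash
# Write the entry to a scratch file first; append it to ~/.ssh/config once reviewed
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
# Replace blazerid with your Cheaha user ID
Host cheaha
    HostName cheaha.rc.uab.edu
    User blazerid
EOF

# Show the entry that would be added
cat "$cfg"
```

After reviewing the output, the entry can be appended with `cat "$cfg" >> ~/.ssh/config`.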

Login

Overview

Once your account has been created, you'll receive an email containing your user ID, generally your Blazer ID. Logging into Cheaha requires an SSH client. Most UAB Windows workstations already have an SSH client installed, possibly named SSH Secure Shell Client or PuTTY. Linux and Mac OS X systems should have an SSH client installed by default.


Client Configuration

This section will cover steps to configure Windows, Linux and Mac OS X clients to connect to Cheaha.

Linux

Linux systems, regardless of the flavor (RedHat, SuSE, Ubuntu, etc...), should already have an SSH client on the system as part of the default install.

- Start a terminal (on RedHat click Applications -> Accessories -> Terminal, on Ubuntu Ctrl+Alt+T)

- At the prompt, enter the following command to connect to Cheaha (Replace blazerid with your Cheaha userid)

ssh blazerid@cheaha.rc.uab.edu

Mac OS X

Mac OS X is a Unix operating system (BSD) and has a built in ssh client.

- Start a terminal (click Finder, type Terminal and double click on Terminal under the Applications category)

- At the prompt, enter the following command to connect to Cheaha (Replace blazerid with your Cheaha userid)

ssh blazerid@cheaha.rc.uab.edu

Windows

There are many SSH clients available for Windows, some commercial and some that are free (GPL). This section will cover two clients that are commonly found on UAB Windows systems.

MobaXterm

MobaXterm is a free suite of SSH tools (a paid Professional version is also available). Of the Windows clients we've used, MobaXterm is the easiest to use and the most feature complete. Features include (but are not limited to):

- SSH client (in a handy web browser like tabbed interface)

- Embedded Cygwin (which allows Windows users to run many Linux commands like grep, rsync, sed)

- Remote file system browser (graphical SFTP)

- X11 forwarding for remotely displaying graphical content from Cheaha

- Installs without requiring Windows Administrator rights

Start MobaXterm and click the Session toolbar button (top left). Click SSH for the session type, enter the following information and click OK. Once finished, double click cheaha.rc.uab.edu in the list of Saved sessions under PuTTY sessions:

| Field | Cheaha Settings |

|---|---|

| Remote host | cheaha.rc.uab.edu |

| Port | 22 |

PuTTY

PuTTY is a free suite of SSH and telnet tools written and maintained by Simon Tatham. PuTTY supports SSH, secure FTP (SFTP), and X forwarding (XTERM) among other tools.

- Start PuTTY (Click START -> All Programs -> PuTTY -> PuTTY). The 'PuTTY Configuration' window will open

- Use these settings for each of the clusters that you would like to configure

| Field | Cheaha Settings |

|---|---|

| Host Name (or IP address) | cheaha.rc.uab.edu |

| Port | 22 |

| Protocol | SSH |

| Saved Sessions | cheaha.rc.uab.edu |

- Click Save to save the configuration, repeat the previous steps for the other clusters

- The next time you start PuTTY, simply double click on the cluster name under the 'Saved Sessions' list

SSH Secure Shell Client

SSH Secure Shell is a commercial application that is installed on many Windows workstations on campus and can be configured as follows:

- Start the program (Click START -> All Programs -> SSH Secure Shell -> Secure Shell Client). The 'default - SSH Secure Shell' window will open

- Click File -> Profiles -> Add Profile to open the 'Add Profile' window

- Type in the name of the cluster (for example: cheaha) in the field and click 'Add to Profiles'

- Click File -> Profiles -> Edit Profiles to open the 'Profiles' window

- Single click on your new profile name

- Use these settings for the clusters

| Field | Cheaha Settings |

|---|---|

| Host name | cheaha.rc.uab.edu |

| User name | blazerid (insert your blazerid here) |

| Port | 22 |

| Protocol | SSH |

| Encryption algorithm | <Default> |

| MAC algorithm | <Default> |

| Compression | <None> |

| Terminal answerback | vt100 |

- Leave 'Connect through firewall' and 'Request tunnels only' unchecked

- Click OK to save the configuration, repeat the previous steps for the other clusters

- The next time you start SSH Secure Shell, click 'Profiles' and click the cluster name

Logging in to Cheaha

No matter which client you use to connect to Cheaha, the first time you connect, the SSH client should display a message asking if you would like to import the host's public key. Answer Yes to this question.

- Connect to Cheaha using one of the methods listed above

- Answer Yes to import the cluster's public key

- Enter your BlazerID password

- If you were issued a temporary password, enter it (passwords are case sensitive). You should see a message similar to this:

    You are required to change your password immediately (password aged)
    WARNING: Your password has expired.
    You must change your password now and login again!
    Changing password for user joeuser.
    Changing password for joeuser
    (current) UNIX password:

- (current) UNIX password: Enter your temporary password at this prompt and press enter

- New UNIX password: Enter your new strong password and press enter

- Retype new UNIX password: Enter your new strong password again and press enter

- After you enter your new password for the second time and press enter, the shell may exit automatically. If it doesn't, type exit and press enter

- Log in again, this time use your new password

- After successfully logging in for the first time, you may see the following message. Just press ENTER at the next three prompts; don't type any passphrases!

It doesn't appear that you have set up your ssh key.

This process will make the files:

/home/joeuser/.ssh/id_rsa.pub

/home/joeuser/.ssh/id_rsa

/home/joeuser/.ssh/authorized_keys

Generating public/private rsa key pair.

Enter file in which to save the key (/home/joeuser/.ssh/id_rsa):

- Enter file in which to save the key (/home/joeuser/.ssh/id_rsa): Press Enter

- Enter passphrase (empty for no passphrase): Press Enter

- Enter same passphrase again: Press Enter

    Your identification has been saved in /home/joeuser/.ssh/id_rsa.
    Your public key has been saved in /home/joeuser/.ssh/id_rsa.pub.
    The key fingerprint is:
    f6:xx:xx:xx:xx:dd:9a:79:7b:83:xx:f9:d7:a7:d6:27 joeuser@cheaha.uabgrid.uab.edu

Congratulations, you should now have a command prompt and be ready to start submitting jobs (see Sample Job Scripts)!

Hardware


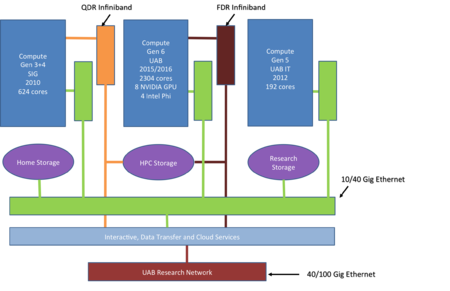

The Cheaha Compute Platform includes three generations of commodity compute hardware, totaling 2340 compute cores, 20 TB of RAM, and over 4.7PB of storage.

The hardware is grouped into generations designated gen3, gen4, gen5 and gen6 (oldest to newest). The following descriptions highlight the hardware profile for each generation.

Generation 3 (gen3) -- 48 2x6 core (576 cores total) 2.66 GHz Intel compute nodes with quad data rate Infiniband, ScaleMP, and the high-perf storage build-out for capacity and redundancy with 120TB DDN. This is the hardware collection purchased with a combination of the NIH SIG funds and some of the 2010 annual VPIT investment. These nodes were given the code name "sipsey" and tagged as such in the node naming for the queue system. These nodes are tagged as "sipsey-compute-#-#" in the ROCKS naming convention. 16 of the gen3 nodes (sipsey-compute-0-1 thru sipsey-compute-0-16) were upgraded in 2014 from 48GB to 96GB of memory per node.

Generation 4 (gen4) -- 3 16-core (48 cores total) compute nodes. This hardware collection was purchased by Hemant Tiwari of SSG. These nodes were given the code name "ssg" and tagged as such in the node naming for the queue system. These nodes are tagged as "ssg-compute-0-#" in the ROCKS naming convention.

- Generation 6 (gen6) --

- 26 Compute Nodes with two 12 core processors (Intel Xeon E5-2680 v3 2.5GHz) with 256GB DDR4 RAM, FDR InfiniBand and 10GigE network cards

- 14 Compute Nodes with two 12 core processors (Intel Xeon E5-2680 v3 2.5GHz) with 384GB DDR4 RAM, FDR InfiniBand and 10GigE network cards

- FDR InfiniBand Switch

- 10Gigabit Ethernet Switch

- Management node and gigabit switch for cluster management

- Bright Advanced Cluster Management software licenses

Summarized, Cheaha's compute pool includes:

- gen4 is 48 cores of 2.70GHz eight-core Intel Xeon E5-2680 processors with 24GB of RAM per core or 384GB total

- gen3.1 is 192 cores of 2.67GHz six-core Intel Xeon X5650 processors with 8GB of RAM per core or 96GB total

- gen3 is 384 cores of 2.67GHz six-core Intel Xeon X5650 processors with 4GB of RAM per core or 48GB total

| gen | queue | #nodes | cores/node | RAM/node |

|---|---|---|---|---|

| gen6.1 | ?? | 26 | 24 | 256G |

| gen6.2 | ?? | 14 | 24 | 384G |

| gen5 | openstack(?) | ? | ? | ?G |

| gen4 | ssg | 3 | 16 | 384G |

| gen3.1 | sipsey | 16 | 12 | 96G |

| gen3 | sipsey | 32 | 12 | 48G |

| gen2 | cheaha | 24 | 8 | 16G |

Performance

| Generation | Type | Nodes | CPUs per Node | Cores Per CPU | Total Cores | Clock Speed (GHz) | Instructions Per Cycle | Hardware Reference |

|---|---|---|---|---|---|---|---|---|

| Gen 6† | Intel Xeon E5-2680 v3 | 96 | 2 | 12 | 2304 | 2.50 | 16 | Intel Xeon E5-2680 v3 |

| Gen 7†† | Intel Xeon E5-2680 v4 | 18 | 2 | 14 | 504 | 2.40 | 16 | Intel Xeon E5-2680 v4 |

| Gen 8 | Intel Xeon E5-2680 v4 | 35 | 2 | 12 | 840 | 2.50 | 16 | Intel Xeon E5-2680 v3 |

| Gen 9 | Intel Xeon Gold 6248R | 52 | 2 | 24 | 2496 | 3.0 | 16 | 3.0GHz Intel Xeon Gold 6248R |

| Generation | Theoretical Peak Tera-FLOPS |

|---|---|

| Gen 6† | 110 |

| Gen 7†† | 358 |

| Gen 8 | TBD |

| Gen 9 | TBD |

† Includes four Intel Xeon Phi 7210 accelerators and four NVIDIA K80 GPUs.

†† Includes 72 NVIDIA Tesla P100 16GB GPUs.
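The columns of the first table suggest how a CPU-only theoretical peak could be estimated: nodes × CPUs per node × cores per CPU × clock speed (GHz) × instructions per cycle gives GFLOPS. The sketch below applies that formula as an assumption; it is not necessarily how the figures in the second table were produced, and it ignores the accelerators and GPUs noted in the footnotes, so its results differ from the listed totals. The function name `peak_tflops` is hypothetical.

```shell
#!/bin/bash
# CPU-only theoretical peak in TFLOPS (assumed formula, accelerators excluded):
#   nodes * cpus_per_node * cores_per_cpu * clock_ghz * instr_per_cycle / 1000
peak_tflops() {
    awk -v n="$1" -v c="$2" -v k="$3" -v ghz="$4" -v ipc="$5" \
        'BEGIN { printf "%.2f\n", n * c * k * ghz * ipc / 1000 }'
}

# Values taken from the Gen 6 and Gen 7 rows of the table above
gen6=$(peak_tflops 96 2 12 2.50 16)
gen7=$(peak_tflops 18 2 14 2.40 16)
echo "Gen 6 CPU-only peak: ${gen6} TFLOPS"
echo "Gen 7 CPU-only peak: ${gen7} TFLOPS"
```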

Cluster Software

- BrightCM 7.2

- CentOS 7.2 x86_64

- SLURM 15.08

Queuing System

All work on Cheaha must be submitted to our queuing system (SLURM). A common mistake made by new users is to run 'jobs' on the head node or login node. This section gives a basic overview of what a queuing system is and why we use it.

What is a queuing system?

- Software that gives users fair allocation of the cluster's resources

- Schedules jobs based on resource requests (the following are commonly requested resources; many more are available)

- Number of processors (often referred to as "slots")

- Maximum memory (RAM) required per slot

- Maximum run time

- Common queuing systems:

- SLURM

- Sun Grid Engine (also known as SGE, OGE, GE)

- OpenPBS

- Torque

- LSF (load sharing facility)

SLURM is a queue management system; the name stands for Simple Linux Utility for Resource Management. SLURM was developed at Lawrence Livermore National Laboratory and currently runs some of the largest compute clusters in the world. SLURM is now the primary job manager on Cheaha, replacing Sun Grid Engine (SGE), the job manager used earlier.

Typical Workflow

- Stage data to $USER_SCRATCH (your scratch directory)

- Research how to run your code in "batch" mode. Batch mode typically means the ability to run it from the command line without requiring any interaction from the user.

- Identify the appropriate resources needed to run the job. The following are mandatory resource requests for all jobs on Cheaha

- Maximum memory (RAM) required per slot

- Maximum runtime

- Write a job script specifying queuing system parameters, resource requests and commands to run program

- Submit script to queuing system (sbatch script.job)

- Monitor job (squeue)

- Review the results and resubmit as necessary

- Clean up the scratch directory by moving or deleting the data off of the cluster
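The middle steps of this workflow (write a job script, submit it, monitor it) can be sketched as a short shell session. This is a minimal illustration, not a recommended template: the directory name `workflow_demo`, the file name `demo.job`, and the requested values are placeholders, and the temporary-directory fallback is an assumption added so the sketch can also be tried on a machine without $USER_SCRATCH or SLURM.

```shell
#!/bin/bash
# Assumption: fall back to a temporary directory when $USER_SCRATCH is unset
workdir="${USER_SCRATCH:-$(mktemp -d)}/workflow_demo"
mkdir -p "$workdir"
jobfile="$workdir/demo.job"

# Job script carrying the two mandatory requests: runtime and memory per slot
cat > "$jobfile" <<'EOF'
#!/bin/bash
#SBATCH --job-name=workflow_demo
#SBATCH --ntasks=1
#SBATCH --time=00:10:00
#SBATCH --mem-per-cpu=1024
srun hostname
EOF

# Submit and monitor only where SLURM is available (i.e. on the cluster)
if command -v sbatch >/dev/null 2>&1; then
    sbatch "$jobfile"
    squeue -u "$USER"
else
    echo "sbatch not found; job script written to $jobfile"
fi
```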

Resource Requests

Accurate resource requests are extremely important to the health of the overall cluster. In order for Cheaha to operate properly, the queuing system must know how much runtime and RAM each job will need.

Mandatory Resource Requests

- Maximum memory (RAM) required per slot

- Maximum runtime

Other Common Resource Requests

- Number of processors (often referred to as "slots")

Submitting Jobs

Batch jobs are submitted on Cheaha by using the "sbatch" command. The full manual for sbatch is available by running the following command:

man sbatch

Job Script File Format

To submit a job to the queuing systems, you will first define your job in a script (a text file) and then submit that script to the queuing system.

The script file needs to be formatted as a UNIX file, not a Windows or Mac text file. In geek speak, this means that the end of line (EOL) character should be a line feed (LF) rather than a carriage return line feed (CRLF) for Windows or carriage return (CR) for Mac.

If you submit a job script formatted as a Windows or Mac text file, your job will likely fail with misleading messages, for example that the path specified does not exist.

Windows Notepad does not have the ability to save files using the UNIX file format. Do NOT use Notepad to create files intended for use on the clusters. Instead use one of the alternative text editors listed in the following section.

Converting Files to UNIX Format

Dos2Unix Method

The lines below that begin with $ are commands, the $ represents the command prompt and should not be typed!

The dos2unix program can be used to convert Windows text files to UNIX files with a simple command. After you have copied the file to your home directory on the cluster, you can identify that the file is a Windows file by executing the following (Windows uses CR LF as the line terminator, where UNIX uses only LF and Mac uses only CR):

    $ file testfile.txt
    testfile.txt: ASCII text, with CRLF line terminators

Now, convert the file to UNIX

    $ dos2unix testfile.txt
    dos2unix: converting file testfile.txt to UNIX format ...

Verify the conversion using the file command

    $ file testfile.txt
    testfile.txt: ASCII text
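If dos2unix is not at hand, the same conversion can be approximated with standard tools, since the only difference is the carriage return before each line feed. The following is a minimal sketch using `tr`; the file names `winfile.sh` and `unixfile.sh` are placeholders, and the sample file is generated only for demonstration.

```shell
#!/bin/bash
# Create a small file with Windows (CRLF) line endings for demonstration
demo_dir=$(mktemp -d)
printf 'echo hello\r\necho world\r\n' > "$demo_dir/winfile.sh"

# Count lines that contain a carriage return before conversion
cr_before=$(grep -c "$(printf '\r')" "$demo_dir/winfile.sh")

# Strip every carriage return, leaving UNIX (LF) line endings
tr -d '\r' < "$demo_dir/winfile.sh" > "$demo_dir/unixfile.sh"

# grep exits non-zero when nothing matches, hence the "|| true"
cr_after=$(grep -c "$(printf '\r')" "$demo_dir/unixfile.sh" || true)
echo "lines with CR before: $cr_before, after: $cr_after"
```

Note that `tr` writes to a new file; unlike dos2unix it does not convert in place.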

Alternative Windows Text Editors

There are many good text editors available for Windows that have the capability to save files using the UNIX file format. Here are a few:

- [Geany] is an excellent free text editor for Windows and Linux that supports Windows, UNIX and Mac file formats, syntax highlighting and many programming features. To convert from Windows to UNIX, click Document, click Set Line Endings, and then Convert and Set to LF (Unix)

- [Notepad++] is a great free Windows text editor that supports Windows, UNIX and Mac file formats, syntax highlighting and many programming features. To convert from Windows to UNIX click Format and then click Convert to UNIX Format

- [TextPad] is another excellent Windows text editor. TextPad is not free, however.

Example Batch Job Script

A shared cluster environment like Cheaha uses a job scheduler to run tasks on the cluster to provide optimal resource sharing among users. Cheaha uses a job scheduling system called SLURM to schedule and manage jobs. A user needs to tell SLURM about resource requirements (e.g. CPU, memory) so that it can schedule jobs effectively. These resource requirements, along with the actual application code, can be specified in a single file commonly referred to as a 'Job Script/File'. The following is a simple job script that prints the hostname and then sleeps for 60 seconds.

    #!/bin/bash
    #
    #SBATCH --job-name=test
    #SBATCH --output=res.txt
    #SBATCH --ntasks=1
    #SBATCH --time=10:00
    #SBATCH --mem-per-cpu=100
    #SBATCH --mail-type=FAIL
    # Shell variables are not expanded on #SBATCH lines; use a literal address
    #SBATCH --mail-user=YOUR_EMAIL_ADDRESS

    srun hostname
    srun sleep 60

Lines starting with '#SBATCH' have a special meaning in the SLURM world. SLURM-specific configuration options are specified after the '#SBATCH' characters. The configuration options above are useful for most job scripts; for additional options, refer to the SLURM commands manual. A job script is submitted to the cluster using SLURM-specific commands. There are many commands available, but the following three are the most common:

- sbatch - to submit job

- scancel - to delete job

- squeue - to view job status

We can submit the above job script using the sbatch command:

    $ sbatch HelloCheaha.sh
    Submitted batch job 52707

When the job script is submitted, SLURM queues it and assigns it a job number (e.g. 52707 in the example above). The job number is available inside the job script through the environment variable $SLURM_JOB_ID. This variable can be used inside the job script to create job-specific directories or file names.
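As an illustration of using the job number for file naming, the sketch below builds a per-job results directory. SLURM exposes the job number as SLURM_JOB_ID inside a running job; the `manual` fallback, the temporary-directory fallback, and the `results/job_<id>` layout are assumptions made here so the snippet can also be tried outside a job.

```shell
#!/bin/bash
# SLURM sets SLURM_JOB_ID inside a running job; "manual" is a stand-in elsewhere
job_id="${SLURM_JOB_ID:-manual}"

# Base directory: network scratch on the cluster, a temp dir elsewhere
base="${USER_SCRATCH:-$(mktemp -d)}"
job_dir="$base/results/job_${job_id}"
mkdir -p "$job_dir"

# Write output into the per-job directory (uname -n prints the node name)
uname -n > "$job_dir/host.txt"
echo "Results for job $job_id are in $job_dir"
```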

Interactive Resources

The login node (the host that you connected to when you set up the SSH connection to Cheaha) is intended for submitting jobs and for lighter prep work required for the job scripts. Do not run heavy computations on the login node. If you have a heavier workload to prepare for a batch job (e.g. compiling code or other manipulations of data) or your compute application requires interactive control, you should request a dedicated interactive node for this work.

Interactive resources are requested by submitting an "interactive" job to the scheduler. Interactive jobs will provide you a command line on a compute resource that you can use just like you would the command line on the login node. The difference is that the scheduler has dedicated the requested resources to your job and you can run your interactive commands without having to worry about impacting other users on the login node.

Interactive jobs that can be run on the command line are requested with the srun command.

srun --ntasks=4 --mem-per-cpu=4096 --time=08:00:00 --partition=medium --job-name=JOB_NAME --pty /bin/bash

This command requests 4 cores (--ntasks), with each task requesting 4GB of RAM (--mem-per-cpu=4096, in megabytes), for 8 hours (--time).
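Because the memory request is per task, the total memory reserved by the job grows with the task count. A quick arithmetic sketch with the values from the srun example (the variable names are illustrative only):

```shell
#!/bin/bash
# Values from the srun example above
ntasks=4
mem_per_cpu_mb=4096

# --mem-per-cpu is given in megabytes by default, so the total scales with --ntasks
total_mb=$(( ntasks * mem_per_cpu_mb ))
total_gb=$(( total_mb / 1024 ))
echo "Total memory requested: ${total_mb} MB (${total_gb} GB)"
```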

More advanced interactive scenarios to support graphical applications are available using VNC or X11 tunneling (for example, X-Win32 2014 for Windows).

Interactive jobs that require running a graphical application are requested with the sinteractive command, via Terminal in your VNC window.

Storage

No Automatic Backups

There is no automatic back up of any data on the cluster (home, scratch, or whatever). All data back up is managed by you. If you aren't managing a data back up process, then you have no backup data.

Home directories

Your home directory on Cheaha is NFS-mounted to the compute nodes as /home/$USER or $HOME. It is acceptable to use your home directory as a location to store job scripts, custom code and libraries.

The home directory must not be used to store large amounts of data. Please use $USER_SCRATCH for actively used data sets or request shared scratch space for shared data sets.

Scratch

Research Computing policy requires that all bulky input and output must be located on the scratch space. The home directory is intended to store your job scripts, log files, libraries and other supporting files.

Important Information:

- Scratch space (network and local) is not backed up.

- Research Computing expects each user to keep their scratch areas clean. The cluster scratch areas are not to be used for archiving data.

Cheaha has two types of scratch space, network mounted and local.

- Network scratch ($USER_SCRATCH) is available on the head node and each compute node. This storage is a Lustre high performance file system providing roughly 240TB of storage. This should be your job's primary working directory, unless the job would benefit from local scratch (see below).

- Local scratch is physically located on each compute node and is not accessible to the other nodes (including the head node). This space is useful if the job performs a lot of file I/O. Most of the jobs that run on our clusters do not fall into this category. Because the local scratch is inaccessible outside the job, you must move any data between local scratch and your network-accessible scratch within your job. For example, step 1 in the job could be to copy the input from $USER_SCRATCH to $TMPDIR, step 2 code execution, step 3 move the data back to $USER_SCRATCH.

Network Scratch

Network scratch is available using the environment variable $USER_SCRATCH or directly by /scratch/user/${USER}

It is advisable to use the environment variable whenever possible rather than the hard coded path.

Local Scratch

Each compute node has a local scratch directory that is accessible via the scheduler variable $TMPDIR. If your job performs a lot of file I/O, the job should use $TMPDIR rather than $USER_SCRATCH to prevent bogging down the network scratch file system. The amount of scratch space available on each compute node is approximately 40GB.

The $TMPDIR is a special temporary directory created by the scheduler and unique to the job. It's important to note that this directory is deleted when the job completes, so the job script has to move the results to $USER_SCRATCH or another location prior to the job exiting.

Note that $TMPDIR is only useful for jobs in which all processes run on the same compute node, so MPI jobs are not candidates for this solution.

The following is an array job example (written with Grid Engine directives) that uses $TMPDIR by transferring the inputs into $TMPDIR at the beginning of the script and the result out of $TMPDIR at the end of the script.

    #!/bin/bash
    #$ -S /bin/bash
    #$ -cwd
    #
    #$ -N local_scratch_example
    #$ -pe smp 1
    #$ -t 1-1000
    # Maximum Job Runtime (ex: 20 minutes)
    #$ -l h_rt=00:20:00
    # Maximum memory needed per slot (ex: 2 GB)
    #$ -l vf=2G
    #
    #$ -j y
    #
    #$ -M YOUR_EMAIL_ADDRESS
    #$ -m eas
    #
    # Load modules if necessary
    source /etc/profile.d/modules.sh
    module load R/R-2.9.0

    echo "TMPDIR: $TMPDIR"
    cd $TMPDIR
    # Create a working directory under the special scheduler local scratch directory
    # using the array job's taskID
    mkdir $SGE_TASK_ID
    cd $SGE_TASK_ID

    # Next copy the input data to the local scratch
    echo "Copying input data from network scratch to $TMPDIR/$SGE_TASK_ID - $(date)"
    # The input data in this case has a numerical file extension that
    # matches $SGE_TASK_ID
    cp -a $USER_SCRATCH/GeneData/INP*.$SGE_TASK_ID ./
    echo "copied input data from network scratch to $TMPDIR/$SGE_TASK_ID - $(date)"

    someapp -S 1 -D 10 -i INP*.$SGE_TASK_ID -o geneapp.out.$SGE_TASK_ID

    # Lastly copy the results back to network scratch
    echo "Copying results from local $TMPDIR/$SGE_TASK_ID to network - $(date)"
    cp -a geneapp.out.$SGE_TASK_ID $USER_SCRATCH/GeneData/
    echo "Copied results from local $TMPDIR/$SGE_TASK_ID to network - $(date)"
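The same stage-in, compute, stage-out pattern can be sketched with SLURM directives. This is an illustrative single-task sketch, not a translation of the array job above: `tr` stands in for the real application, the input file `INP.1` is generated as a placeholder, and the fallbacks for $USER_SCRATCH, $TMPDIR and $SLURM_JOB_ID are assumptions added so the script can also be tried outside a job.

```shell
#!/bin/bash
#SBATCH --job-name=local_scratch_sketch
#SBATCH --ntasks=1
#SBATCH --time=00:20:00
#SBATCH --mem-per-cpu=2048

# Assumptions: these fallbacks exist only so the sketch can run outside a job
net_scratch="${USER_SCRATCH:-$(mktemp -d)}"
local_scratch="${TMPDIR:-/tmp}/sketch_${SLURM_JOB_ID:-$$}"
mkdir -p "$local_scratch" "$net_scratch/GeneData"

# Placeholder input; a real job would already have its data on network scratch
echo "sample input" > "$net_scratch/GeneData/INP.1"

# Step 1: stage input to local scratch
cp -a "$net_scratch/GeneData/INP.1" "$local_scratch/"

# Step 2: run the computation locally (tr stands in for the real application)
tr 'a-z' 'A-Z' < "$local_scratch/INP.1" > "$local_scratch/out.1"

# Step 3: copy results back, then clean up the local working directory
cp -a "$local_scratch/out.1" "$net_scratch/GeneData/"
rm -rf "$local_scratch"
```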

Project Storage

Cheaha has a location where shared data can be stored called $SHARE_SCRATCH . As with user scratch, this area is not backed up!

This is helpful if a team of researchers must access the same data. Please open a help desk ticket to request a project directory under $SHARE_SCRATCH.

Uploading Data

Data can be moved onto the cluster (pushed) from a remote client (i.e. your desktop) via SCP or SFTP. Data can also be downloaded to the cluster (pulled) by issuing transfer commands once you are logged into the cluster. Common transfer methods are `wget <URL>`, FTP, or SCP, and depend on how the data is made available from the data provider.

Large data sets should be staged directly to your $USER_SCRATCH directory so as not to fill up $HOME. If you are working on a data set shared with multiple users, it's preferable to request space in $SHARE_SCRATCH rather than duplicating the data for each user.

Environment Modules

Environment Modules is installed on Cheaha and should be used when constructing your job scripts if an applicable module file exists. Using the module command you can easily configure your environment for specific software packages without having to know the specific environment variables and values to set. Modules allows you to dynamically configure your environment without having to log out and log in for the changes to take effect.

If you find that specific software does not have a module, please submit a helpdesk ticket to request the module.

Note: If you are using LAM MPI for parallel jobs, you must load the LAM module in both your job script and your profile. For example, assume we want to use LAM-MPI compiled for GNU:

- for BASH users add this to your ~/.bashrc and your job script, or for CSH users add this to your ~/.cshrc and your job script

module load lammpi/lam-7.1-gnu

- Cheaha supports bash completion for the module command. For example, type 'module' and press the TAB key twice to see a list of options:

    module TAB TAB
    add          display      initlist     keyword      refresh      switch       use
    apropos      help         initprepend  list         rm           unload       whatis
    avail        initadd      initrm       load         show         unuse
    clear        initclear    initswitch   purge        swap         update

- To see the list of available modulefiles on the cluster, run the module avail command (note the example list below may not be complete!) or module load followed by two tab key presses:

    module avail

    ------------------------------ /cm/shared/modulefiles ------------------------------
    acml/gcc/64/5.3.1 acml/open64-int64/mp/fma4/5.3.1 fftw2/openmpi/gcc/64/float/2.1.5 intel-cluster-runtime/ia32/3.8 netcdf/gcc/64/4.3.3.1
    acml/gcc/fma4/5.3.1 blacs/openmpi/gcc/64/1.1patch03 fftw2/openmpi/open64/64/double/2.1.5 intel-cluster-runtime/intel64/3.8 netcdf/open64/64/4.3.3.1
    acml/gcc/mp/64/5.3.1 blacs/openmpi/open64/64/1.1patch03 fftw2/openmpi/open64/64/float/2.1.5 intel-cluster-runtime/mic/3.8 netperf/2.7.0
    acml/gcc/mp/fma4/5.3.1 blas/gcc/64/3.6.0 fftw3/openmpi/gcc/64/3.3.4 intel-tbb-oss/ia32/44_20160526oss open64/4.5.2.1
    acml/gcc-int64/64/5.3.1 blas/open64/64/3.6.0 fftw3/openmpi/open64/64/3.3.4 intel-tbb-oss/intel64/44_20160526oss openblas/dynamic/0.2.15
    acml/gcc-int64/fma4/5.3.1 bonnie++/1.97.1 gdb/7.9 iozone/3_434 openmpi/gcc/64/1.10.1
    acml/gcc-int64/mp/64/5.3.1 cmgui/7.2 globalarrays/openmpi/gcc/64/5.4 lapack/gcc/64/3.6.0 openmpi/open64/64/1.10.1
    acml/gcc-int64/mp/fma4/5.3.1 cuda75/blas/7.5.18 globalarrays/openmpi/open64/64/5.4 lapack/open64/64/3.6.0 pbspro/13.0.2.153173
    acml/open64/64/5.3.1 cuda75/fft/7.5.18 hdf5/1.6.10 mpich/ge/gcc/64/3.2 puppet/3.8.4
    acml/open64/fma4/5.3.1 cuda75/gdk/352.79 hdf5_18/1.8.16 mpich/ge/open64/64/3.2 rc-base
    acml/open64/mp/64/5.3.1 cuda75/nsight/7.5.18 hpl/2.1 mpiexec/0.84_432 scalapack/mvapich2/gcc/64/2.0.2
    acml/open64/mp/fma4/5.3.1 cuda75/profiler/7.5.18 hwloc/1.10.1 mvapich/gcc/64/1.2rc1 scalapack/openmpi/gcc/64/2.0.2
    acml/open64-int64/64/5.3.1 cuda75/toolkit/7.5.18 intel/compiler/32/15.0/2015.5.223 mvapich/open64/64/1.2rc1 sge/2011.11p1
    acml/open64-int64/fma4/5.3.1 default-environment intel/compiler/64/15.0/2015.5.223 mvapich2/gcc/64/2.2b slurm/15.08.6
    acml/open64-int64/mp/64/5.3.1 fftw2/openmpi/gcc/64/double/2.1.5 intel-cluster-checker/2.2.2 mvapich2/open64/64/2.2b torque/6.0.0.1

    ------------------------------ /share/apps/modulefiles ------------------------------
    rc/BrainSuite/15b rc/freesurfer/freesurfer-5.3.0 rc/intel/compiler/64/ps_2016/2016.0.047 rc/matlab/R2015a rc/SAS/v9.4
    rc/cmg/2012.116.G rc/gromacs-intel/5.1.1 rc/Mathematica/10.3 rc/matlab/R2015b
    rc/dsistudio/dsistudio-20151020 rc/gtool/0.7.5 rc/matlab/R2012a rc/MRIConvert/2.0.8

    ------------------------------ /share/apps/rc/modules/all ------------------------------
    AFNI/linux_openmp_64-goolf-1.7.20-20160616 gperf/3.0.4-intel-2016a MVAPICH2/2.2b-GCC-4.9.3-2.25
    Amber/14-intel-2016a-AmberTools-15-patchlevel-13-13 grep/2.15-goolf-1.4.10 NASM/2.11.06-goolf-1.7.20
    annovar/2016Feb01-foss-2015b-Perl-5.22.1 GROMACS/5.0.5-intel-2015b-hybrid NASM/2.11.08-foss-2015b
    ant/1.9.6-Java-1.7.0_80 GSL/1.16-goolf-1.7.20 NASM/2.11.08-intel-2016a
    APBS/1.4-linux-static-x86_64 GSL/1.16-intel-2015b NASM/2.12.02-foss-2016a
    ASHS/rev103_20140612 GSL/2.1-foss-2015b NASM/2.12.02-intel-2015b
    Aspera-Connect/3.6.1 gtool/0.7.5_linux_x86_64 NASM/2.12.02-intel-2016a
    ATLAS/3.10.1-gompi-1.5.12-LAPACK-3.4.2 guile/1.8.8-GNU-4.9.3-2.25 ncurses/5.9-foss-2015b
    Autoconf/2.69-foss-2016a HAPGEN2/2.2.0 ncurses/5.9-GCC-4.8.4
    Autoconf/2.69-GCC-4.8.4 HarfBuzz/1.2.7-intel-2016a ncurses/5.9-GNU-4.9.3-2.25
    Autoconf/2.69-GNU-4.9.3-2.25 HDF5/1.8.15-patch1-intel-2015b ncurses/5.9-goolf-1.4.10
    . . . .

Some software packages have multiple module files, for example:

- GCC/4.7.2

- GCC/4.8.1

- GCC/4.8.2

- GCC/4.8.4

- GCC/4.9.2

- GCC/4.9.3

- GCC/4.9.3-2.25

In this case, the GCC module will always load the latest version, so loading this module is equivalent to loading GCC/4.9.3-2.25. If you always want to use the latest version, use this approach. If you want to use a specific version, use the module file containing the appropriate version number.

Some modules, when loaded, will actually load other modules. For example, the GROMACS/5.0.5-intel-2015b-hybrid module will also load intel/2015b and other related tools.

- To load a module, ex: for a GROMACS job, use the following module load command in your job script:

module load GROMACS/5.0.5-intel-2015b-hybrid

- To see a list of the modules that you currently have loaded use the module list command

    module list
    Currently Loaded Modulefiles:
      1) slurm/15.08.6                            9) impi/5.0.3.048-iccifort-2015.3.187-GNU-4.9.3-2.25   17) Tcl/8.6.3-intel-2015b
      2) rc-base                                 10) iimpi/7.3.5-GNU-4.9.3-2.25                          18) SQLite/3.8.8.1-intel-2015b
      3) GCC/4.9.3-binutils-2.25                 11) imkl/11.2.3.187-iimpi-7.3.5-GNU-4.9.3-2.25          19) Tk/8.6.3-intel-2015b-no-X11
      4) binutils/2.25-GCC-4.9.3-binutils-2.25   12) intel/2015b                                         20) Python/2.7.9-intel-2015b
      5) GNU/4.9.3-2.25                          13) bzip2/1.0.6-intel-2015b                             21) Boost/1.58.0-intel-2015b-Python-2.7.9
      6) icc/2015.3.187-GNU-4.9.3-2.25           14) zlib/1.2.8-intel-2015b                              22) GROMACS/5.0.5-intel-2015b-hybrid
      7) ifort/2015.3.187-GNU-4.9.3-2.25         15) ncurses/5.9-intel-2015b
      8) iccifort/2015.3.187-GNU-4.9.3-2.25      16) libreadline/6.3-intel-2015b

- A module can be removed from your environment by using the module unload command:

module unload GROMACS/5.0.5-intel-2015b-hybrid

- The definition of a module can also be viewed using the module show command, revealing what a specific module will do to your environment:

    module show GROMACS/5.0.5-intel-2015b-hybrid
    -------------------------------------------------------------------
    /share/apps/rc/modules/all/GROMACS/5.0.5-intel-2015b-hybrid:

    module-whatis    GROMACS is a versatile package to perform molecular dynamics, i.e. simulate the Newtonian equations of motion for systems with hundreds to millions of particles. - Homepage: http://www.gromacs.org
    conflict         GROMACS
    prepend-path     CPATH /share/apps/rc/software/GROMACS/5.0.5-intel-2015b-hybrid/include
    prepend-path     LD_LIBRARY_PATH /share/apps/rc/software/GROMACS/5.0.5-intel-2015b-hybrid/lib64
    prepend-path     LIBRARY_PATH /share/apps/rc/software/GROMACS/5.0.5-intel-2015b-hybrid/lib64
    prepend-path     MANPATH /share/apps/rc/software/GROMACS/5.0.5-intel-2015b-hybrid/share/man
    prepend-path     PATH /share/apps/rc/software/GROMACS/5.0.5-intel-2015b-hybrid/bin
    prepend-path     PKG_CONFIG_PATH /share/apps/rc/software/GROMACS/5.0.5-intel-2015b-hybrid/lib64/pkgconfig
    setenv           EBROOTGROMACS /share/apps/rc/software/GROMACS/5.0.5-intel-2015b-hybrid
    setenv           EBVERSIONGROMACS 5.0.5
    setenv           EBDEVELGROMACS /share/apps/rc/software/GROMACS/5.0.5-intel-2015b-hybrid/easybuild/GROMACS-5.0.5-intel-2015b-hybrid-easybuild-devel
    -------------------------------------------------------------------

Error Using Modules from a Job Script

If your job uses modules and its command runs fine from the command line but fails when run from the job, the cause may be shell initialization in the script. If you see this error in your job's error output file:

-bash: module: line 1: syntax error: unexpected end of file
-bash: error importing function definition for `BASH_FUNC_module'

add the command `unset module` before calling your module files. The -V job argument passes the environment from your current shell to the job, and the module function it carries along conflicts with the module function used in your script.
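The effect of the workaround can be tried locally. The sketch below defines a stand-in module function, removes it, and checks that it is gone. The explicit unset -f line is an addition for shells where a plain unset only targets variables; it does not come from this page.

```shell
# Stand-in for the broken "module" function a job can inherit through -V.
module() { echo "stale definition"; }

# The workaround from the text: drop the inherited definition first.
unset module
# Some shells treat a plain "unset" as variable-only, so also remove the
# function explicitly (an addition for portability, not from the page).
unset -f module 2>/dev/null

if type module >/dev/null 2>&1; then
    module_state=defined
else
    module_state=undefined
fi
echo "module is $module_state"
```

In a real job script the unset would be followed by whatever normally defines the module command (the Gromacs sample on this page sources /etc/profile.d/modules.sh) and then by the module load lines.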

Sample Job Scripts

The following are sample job scripts. Be careful to edit them for your environment: replace YOUR_EMAIL_ADDRESS with your real email address, set h_rt to an appropriate runtime limit, and modify the job name and any other parameters.

Hello World is the classic example used throughout programming. We don't want to buck the system, so we'll use it as well to demonstrate job submission, with one minor variation: our hello world will send us a greeting using the name of whatever machine it runs on. For example, when run on the Cheaha head node, it would print "Hello from cheaha.uabgrid.uab.edu".

Hello World (serial)

A serial job is one that can run independently of other commands, i.e. it doesn't depend on data from other jobs running simultaneously. You can run many serial jobs in any order. This is a common way to process lots of data when each command works on a single piece of data, for example running the same conversion on hundreds of images.

Here we show how to create a job script for one simple command. Running more than one command just requires submitting more jobs.
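As a rough illustration of "submitting more jobs", the sketch below prints one qsub command per input file rather than submitting anything. The job script name convert.job and the image names are hypothetical placeholders, and the sketch assumes the job script reads its input file from its first argument.

```shell
# Dry run: print the qsub command for each input instead of submitting it.
# "convert.job" and the image names are hypothetical placeholders.
submit_all() {
    for input in "$@"; do
        echo "qsub convert.job $input"
    done
}

submit_all img001.png img002.png img003.png
```

Removing the echo would turn the dry run into real submissions, one serial job per file.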

- Create your hello world application. Run these commands to create a script, make it executable, and run it (just copy and paste the following onto the command line).

cat > helloworld.sh << 'EOF'
#!/bin/bash
echo Hello from `hostname`
EOF
chmod +x helloworld.sh
./helloworld.sh
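Whether the here-document delimiter is quoted decides when `hostname` runs, which matters for a script meant to report the machine it executes on. The sketch below writes the same line both ways into a temporary directory so the two results can be compared. The file names are chosen only for this illustration.

```shell
workdir=$(mktemp -d)

# Unquoted delimiter: `hostname` runs now, and its output is written to the file.
cat > "$workdir/expanded.sh" << EOF
echo Hello from `hostname`
EOF

# Quoted delimiter: the text is written literally, so `hostname` runs later,
# on whichever machine executes the script.
cat > "$workdir/literal.sh" << 'EOF'
echo Hello from `hostname`
EOF

cat "$workdir/expanded.sh"
cat "$workdir/literal.sh"
```

Only the quoted form prints the compute node's name when the script later runs as a job.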

- Create the Grid Engine job script that will request 1 CPU slot and a maximum runtime of 10 minutes

$ vi helloworld.job

#!/bin/bash
#
# Define the shell used by your compute job
#
#$ -S /bin/bash
#
# Tell the cluster to run in the current directory from where you submit the job
#
#$ -cwd
#
# Name your job to make it easier for you to track
#
#$ -N HelloWorld_serial
#
# Tell the scheduler you only need 10 minutes
#
#$ -l h_rt=00:10:00,s_rt=0:08:00,vf=256M
#
# Set your email address and request notification when your job is complete or if it fails
#
#$ -M YOUR_EMAIL_ADDRESS
#$ -m eas
#
#
# Load the appropriate module files
#
# (no module is needed for this example, normally an appropriate module load command appears here)
#
# Tell the scheduler to use the environment from your current shell
#
#$ -V
./helloworld.sh

- Submit the job to Grid Engine and check the status using qstat

$ qsub helloworld.job
Your job 11613 ("HelloWorld_serial") has been submitted
$ qstat -u $USER
job-ID  prior   name       user         state submit/start at     queue                          slots ja-task-ID
-----------------------------------------------------------------------------------------------------------------
  11613 8.79717 HelloWorld jsmith       r     03/13/2009 09:24:35 all.q@compute-0-3.local            1

- When the job completes, you should have output files named HelloWorld_serial.o* and HelloWorld_serial.e* (replace the asterisk with the job ID, for example HelloWorld_serial.o11613). The files take their name from the -N setting in the job script; qstat shortens long job names in its listing. The .o file is the standard output from the job and .e will contain any errors.

$ cat HelloWorld_serial.o11613
Hello from compute-0-3.local

Hello World (parallel with MPI)

MPI is used to coordinate the activity of many computations occurring in parallel. It is commonly used in simulation software for molecular dynamics, fluid dynamics, and similar domains where a significant amount of data is exchanged between cooperating processes.

Here is a simple parallel Grid Engine job script for running commands that rely on MPI. This example also shows how to compile the code and submit the job script to the Grid Engine.

- First, create a directory for the Hello World jobs

$ mkdir -p ~/jobs/helloworld
$ cd ~/jobs/helloworld

- Create the Hello World code written in C. This MPI-enabled Hello World includes a 3-minute sleep to ensure the job runs for several minutes; a normal hello world example would run in a matter of seconds.

$ vi helloworld-mpi.c

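#include <stdio.h>
#include <unistd.h>   /* for sleep() */
#include <mpi.h>

int main(int argc, char **argv)
{
    int node;      /* rank of this process within MPI_COMM_WORLD */
    int i, j;
    float f;

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &node);
    printf("Hello World from Node %d.\n", node);

    /* Keep the job alive long enough to watch it in the queue. */
    sleep(180);
    for (j = 0; j <= 100000; j++)
        for (i = 0; i <= 100000; i++)
            f = i * 2.718281828 * i + i + i * 3.141592654;

    MPI_Finalize();
    return 0;
}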

- Compile the code. First purge any modules you may have loaded, then load the module for OpenMPI GNU. The mpicc command will compile the code and produce a binary named helloworld_gnu_openmpi

$ module purge
$ module load openmpi/openmpi-gnu
$ mpicc helloworld-mpi.c -o helloworld_gnu_openmpi

- Create the Grid Engine job script that will request 8 CPU slots and a maximum runtime of 10 minutes

$ vi helloworld.job

#$ -S /bin/bash
#$ -cwd
#
#$ -N HelloWorld
#$ -pe openmpi 8
#$ -l h_rt=00:10:00,s_rt=0:08:00,vf=1G
#$ -j y
#
#$ -M YOUR_EMAIL_ADDRESS
#$ -m eas
#
# Load the appropriate module files
module load openmpi/openmpi-gnu
#$ -V
mpirun -np $NSLOTS helloworld_gnu_openmpi

- Submit the job to Grid Engine and check the status using qstat

$ qsub helloworld.job
Your job 11613 ("HelloWorld") has been submitted
$ qstat -u $USER
job-ID  prior   name       user         state submit/start at     queue                          slots ja-task-ID
-----------------------------------------------------------------------------------------------------------------
  11613 8.79717 HelloWorld jsmith       r     03/13/2009 09:24:35 all.q@compute-0-3.local            8

- When the job completes, you should have output files named HelloWorld.o* and HelloWorld.po* (replace the asterisk with the job ID, for example HelloWorld.o11613). The .o file holds the job's output, including any errors, because the script merges standard error into it with -j y. The .po file holds output from the parallel environment's startup and shutdown. The order of the lines varies from run to run.

$ cat HelloWorld.o11613
Hello World from Node 0.
Hello World from Node 1.
Hello World from Node 4.
Hello World from Node 6.
Hello World from Node 5.
Hello World from Node 7.
Hello World from Node 2.
Hello World from Node 3.

Hello World (mini-cluster environment)

You may have a computing problem that needs several computers at the same time but isn't an MPI-based solution. In other words, sometimes you just need a cluster to run your work on because you have tasks that run on different computers. SGE and OpenMPI make it easy to reserve your own cluster.

This example illustrates how to set up the request for your mini-cluster.

We start by making a slight modification to our submit script to request more than one computer at a time and then run our serial command on several machines at the same time. This example takes the qsub script from the MPI example and executes the helloworld.sh command using mpirun. mpirun is a utility that starts processes on different computers and provides information that your command can use if it is compiled with MPI libraries. In this example, we are just using it as a tool to control the cluster we just requested. Think of mpirun as a subscheduler that controls the mini-cluster you requested via SGE.

Edit the qsub script from the MPI example above to call your helloworld.sh script instead of the MPI-based helloworld we built above. Change the mpirun line in the qsub:

mpirun -np $NSLOTS helloworld_gnu_openmpi

to the following

mpirun -np $NSLOTS ./helloworld.sh

Once you've made this change you can run your qsub again and this time you'll see output from helloworld.sh as it was run on each node in your mini-cluster.

$ qsub helloworld.job

Your job 8143408 ("HelloWorld") has been submitted

$ qstat

job-ID  prior   name       user         state submit/start at     queue                          slots ja-task-ID
----------------------------------------------------------------------------------------------------------------
8143408 0.00000 HelloWorld jpr          qw    09/16/2011 11:02:01                                    8

If you want to change the size of your cluster, just specify a different size on the command line when you submit the job via qsub. Command line arguments to qsub override the settings inside your qsub script.

$ qsub -pe openmpi 2 helloworld.job

If you are requesting your own mini-cluster, you are usually not interested in running the exact same command on each node in your cluster. You could use the regular serial commands for that. Ordinarily, you will want to start different commands on different parts of your cluster, like you would in the Hadoop example.
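One way to start different commands on different parts of the mini-cluster is a small wrapper that chooses what to do from the process rank. The sketch below assumes Open MPI, whose mpirun exports OMPI_COMM_WORLD_RANK to each process it starts. The role names and the role_for_rank helper are hypothetical.

```shell
# Pick a role from the process rank. Open MPI's mpirun exports
# OMPI_COMM_WORLD_RANK to each process; the role names are hypothetical.
role_for_rank() {
    if [ "$1" -eq 0 ]; then
        echo coordinator
    else
        echo worker
    fi
}

rank=${OMPI_COMM_WORLD_RANK:-0}    # default to 0 when run outside mpirun
echo "rank $rank on $(hostname) acts as $(role_for_rank "$rank")"
```

Saved under a name such as dispatch.sh (also hypothetical) and made executable, it could replace the command on the mpirun line of the job script.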

Hello World (serial) -- revisited

The job submit scripts (qsub scripts) are bash shell scripts in their own right. The scheduler directives use the #$ prefix because the # character starts a comment in bash, so bash treats every such line as a comment and never executes it. This lets us weave scheduler directives into standard bash scripts, execute a script locally, and then run the same script on a cluster node by calling it with qsub. We can use this to move more smoothly between development and production job runs.
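A quick local check shows that bash skips the #$ lines. The sketch writes a tiny script containing a few directives to a temporary file and runs it with bash. The job name DirectiveDemo is made up for this example.

```shell
demo=$(mktemp)

# A minimal job script: three scheduler directives and one real command.
cat > "$demo" << 'EOF'
#!/bin/bash
#$ -cwd
#$ -N DirectiveDemo
#$ -l h_rt=00:10:00
echo "only this line runs"
EOF

# Run locally, bash treats every "#$" line as an ordinary comment.
output=$(bash "$demo")
echo "$output"
```

The scheduler, by contrast, reads those same lines as options when the file is passed to qsub.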

The following example is a simple variation on the serial job above. All we will do is convert our qsub script into a command called helloworld that calls the helloworld.sh command.

If the first line of a file is #!/bin/bash and that file is executable, the shell will automatically run it as if it were any other system command, e.g. ls. That is, the ".sh" extension on our helloworld.sh script is completely optional and is only meaningful to the user.

Copy the serial helloworld.job script to a new file, add the special #!/bin/bash as the first line, and make it executable with the following command (note: those are single quotes in the echo command, and the "-" tells cat to read the echoed line from standard input before the file):

echo '#!/bin/bash' | cat - helloworld.job > helloworld ; chmod +x helloworld

Our qsub script has now become a regular command. We can execute it by adding the simple prefix "./", which means "execute this file in the current directory":

./helloworld
Hello from cheaha.uabgrid.uab.edu

Or if we want to run the command on a compute node, replace the "./" prefix with "qsub ":

$ qsub helloworld

Your job 8143171 ("HelloWorld_serial") has been submitted

And when the cluster run is complete you can look at the content of the output:

$ cat HelloWorld_serial.o8143171
Hello from sipsey-compute-0-5.local

You can use this approach of treating your qsub files as command wrappers to build a collection of commands that can be executed locally or via qsub. The other examples can be restructured similarly.

To avoid having to use the "./" prefix, just add the current directory to your PATH. Also, if you plan to do heavy development using this feature on the cluster, please be sure to run qrsh first so you don't load the head node with your development work.

Gromacs

#!/bin/bash
#$ -S /bin/bash
#
# Request the maximum runtime for the job
#$ -l h_rt=2:00:00,s_rt=1:55:00
#
# Request the maximum memory needed for each slot / processor core
#$ -l vf=256M
#
# Send mail only when the job ends
#$ -m e
#
# Execute from the current working directory
#$ -cwd
#
#$ -j y
#
# Job Name and email
#$ -N G-4CPU-intel
#$ -M YOUR_EMAIL_ADDRESS
#
# Use OpenMPI parallel environment and 4 slots
#$ -pe openmpi* 4
#
# Load the appropriate module(s)
. /etc/profile.d/modules.sh
module load gromacs/gromacs-4-intel
#
#$ -V
#
# Change directory to the job working directory if not already there
cd ${USER_SCRATCH}/jobs/gromacs
# Single precision
MDRUN=mdrun_mpi
# The $NSLOTS variable is set automatically by SGE to match the number of
# slots requested
export MYFILE=production-Npt-323K_${NSLOTS}CPU
mpirun -np $NSLOTS $MDRUN -v -np $NSLOTS -s $MYFILE -o $MYFILE -c $MYFILE -x $MYFILE -e $MYFILE -g ${MYFILE}.log

R

If you are using LAM MPI for parallel jobs, you must add the following two lines to your ~/.bashrc or ~/.cshrc file.

module load lammpi/lam-7.1-gnu

The following is an example job script that uses an array of 1000 tasks (-t 1-1000). Each task has a maximum runtime of 2 hours and will use no more than 256 MB of RAM (h_rt=2:00:00,vf=256M).

The array is also throttled to run at most 32 concurrent tasks at any time (-tc 32). This feature is not available on coosa.

More R examples are available here: Running R Jobs on a Rocks Cluster

Create a working directory and the job submission script

$ mkdir -p ~/jobs/ArrayExample
$ cd ~/jobs/ArrayExample
$ vi R-example-array.job

#!/bin/bash
#$ -S /bin/bash
#
# Request the maximum runtime for the job
#$ -l h_rt=2:00:00,s_rt=1:55:00
#
# Request the maximum memory needed for each slot / processor core
#$ -l vf=256M
#
#$ -M YOUR_EMAIL_ADDRESS
# Email me only when tasks abort, use '#$ -m n' to disable all email for this job
#$ -m a
#$ -cwd
#$ -j y
#
# Job Name
#$ -N ArrayExample
#
#$ -t 1-1000
#$ -tc 32
#
#$ -e $HOME/negcon/rep$TASK_ID/$JOB_NAME.e$JOB_ID.$TASK_ID
#$ -o $HOME/negcon/rep$TASK_ID/$JOB_NAME.o$JOB_ID.$TASK_ID
. /etc/profile.d/modules.sh
module load R/R-2.9.0
#$ -v PATH,R_HOME,R_LIBS,LD_LIBRARY_PATH,CWD
cd ~/jobs/ArrayExample/rep$SGE_TASK_ID
R CMD BATCH rscript.R
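The job script changes into a rep directory named after the task ID, so each of those directories presumably has to exist, and contain rscript.R, before the array is submitted. The following sketch shows one way to create them. The make_task_dirs helper is hypothetical, and the demonstration uses a temporary directory and 5 tasks rather than ~/jobs/ArrayExample and 1000.

```shell
# Create one rep<N> directory per array task (hypothetical helper).
make_task_dirs() {
    base=$1
    count=$2
    i=1
    while [ "$i" -le "$count" ]; do
        mkdir -p "$base/rep$i"
        i=$((i + 1))
    done
}

# Demonstrate on a scratch directory with 5 tasks instead of 1000.
scratch=$(mktemp -d)
make_task_dirs "$scratch" 5
ls "$scratch"
```

For the job above, the equivalent call would be make_task_dirs ~/jobs/ArrayExample 1000, followed by copying rscript.R into each directory.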

Submit the job to the Grid Engine and check the status of the job using the qstat command

$ qsub R-example-array.job
$ qstat

Installed Software

A partial list of installed software with additional instructions for their use is available on the Cheaha Software page.