Cheaha: Difference between revisions

Jpr@uab.edu (talk | contribs) (→Software: Updates to improve better highlight the eng cheaha page, document use of modules, and improve wording.) |

(Changed support@vo.uabgrid.uab.edu to support@listserv.uab.edu) |

||

| (89 intermediate revisions by 8 users not shown) | |||

| Line 1: | Line 1: | ||

'''Cheaha''' is a campus resource dedicated to enhancing research computing productivity at UAB. Cheaha is | {{Main_Banner}} | ||

'''Cheaha''' is a campus resource dedicated to enhancing research computing productivity at UAB. [http://cheaha.uabgrid.uab.edu Cheaha] is managed by [http://www.uab.edu/it UAB Information Technology's Research Computing group (UAB ITRC)] and is available to members of the UAB community in need of increased computational capacity. Cheaha supports [http://en.wikipedia.org/wiki/High-performance_computing high-performance computing (HPC)] and [http://en.wikipedia.org/wiki/High-throughput_computing high throughput computing (HTC)] paradigms. | |||

Cheaha | Cheaha provides users with a traditional command-line interactive environment with access to many scientific tools that can leverage its dedicated pool of local compute resources. Alternately, users of graphical applications can start a [[Setting_Up_VNC_Session|cluster desktop]]. The local compute pool provides access to compute hardware based on the [http://en.wikipedia.org/wiki/X86_64 x86-64 64-bit architecture]. The compute resources are organized into a unified Research Computing System. The compute fabric for this system is anchored by the Cheaha cluster, [[ Resources |a commodity cluster with approximately 2400 cores]] connected by low-latency Fourteen Data Rate (FDR) InfiniBand networks. The compute nodes are backed by 6.6PB raw GPFS storage on DDN SFA12KX hardware, an additional 20TB available for home directories on a traditional Hitachi SAN, and other ancillary services. The compute nodes combine to provide over 110TFlops of dedicated computing power. | ||

Cheaha is composed of resources that span data centers located in the UAB Shared Computing facility UAB 936 Building and the RUST Computer Center. Resource design and development is led by UAB IT Research Computing in open collaboration with community members. Operational [mailto:support@listserv.uab.edu support] is provided by UAB IT's Research Computing group. | |||

Cheaha is | Cheaha is named in honor of [http://en.wikipedia.org/wiki/Cheaha_Mountain Cheaha Mountain], the highest peak in the state of Alabama. Cheaha is a popular destination whose summit offers clear vistas of the surrounding landscape. (Cheaha Mountain photo-streams on [http://www.flickr.com/search/?q=cheaha Flickr] and [http://picasaweb.google.com/lh/view?q=cheaha&psc=G&filter=1# Picasa]). | ||

== Using == | |||

=== Getting Started === | |||

For information on getting an account, logging in, and running a job, please see [[Cheaha2_GettingStarted|Getting Started]]. | |||

== History == | == History == | ||

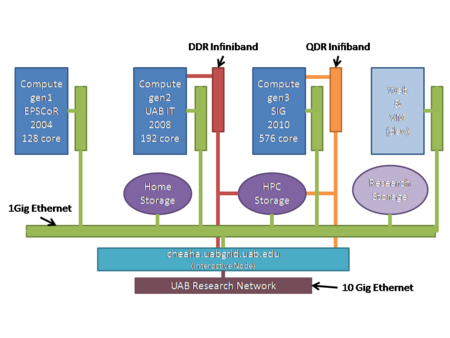

[[Image: | [[Image:Research-computing-platform.png|right|thumb|450px|Logical Diagram of Cheaha Configuration]] | ||

=== 2005 === | |||

In 2002 UAB was awarded an infrastructure development grant through the NSF EPSCoR program. This led to the 2005 acquisition of a 64-node compute cluster with two AMD Opteron 242 1.6 GHz CPUs per node (128 total cores). This cluster was named Cheaha. Cheaha expanded the compute capacity available at UAB and was the first general-access resource for the community. It led to expanded roles for UAB IT in research computing support through the development of the UAB Shared HPC Facility in BEC and provided further engagement in Globus-based grid computing resource development on campus via UABgrid and regionally via [http://www.suragrid.org SURAgrid]. | In 2002 UAB was awarded an infrastructure development grant through the NSF EPSCoR program. This led to the 2005 acquisition of a 64-node compute cluster with two AMD Opteron 242 1.6 GHz CPUs per node (128 total cores). This cluster was named Cheaha. Cheaha expanded the compute capacity available at UAB and was the first general-access resource for the community. It led to expanded roles for UAB IT in research computing support through the development of the UAB Shared HPC Facility in BEC and provided further engagement in Globus-based grid computing resource development on campus via UABgrid and regionally via [http://www.suragrid.org SURAgrid]. | ||

=== 2008 | === 2008 === | ||

In 2008, money was allocated by UAB IT for hardware upgrades, which led to the acquisition of an additional 192 cores based on a Dell clustering solution with Intel Quad-Core E5450 3.0 GHz CPUs in August of 2008. This upgrade migrated Cheaha's core infrastructure to the Dell blade clustering solution. It provided a 3-fold increase in processor density over the original hardware and enabled more computing power to be located in the same physical space with room for expansion, an important consideration in light of the continued growth in processing demand. This hardware represented a major technology upgrade that included space for additional expansion to address overall capacity demand and enable resource reservation. | In 2008, money was allocated by UAB IT for hardware upgrades, which led to the acquisition of an additional 192 cores based on a Dell clustering solution with Intel Quad-Core E5450 3.0 GHz CPUs in August of 2008. This upgrade migrated Cheaha's core infrastructure to the Dell blade clustering solution. It provided a 3-fold increase in processor density over the original hardware and enabled more computing power to be located in the same physical space with room for expansion, an important consideration in light of the continued growth in processing demand. This hardware represented a major technology upgrade that included space for additional expansion to address overall capacity demand and enable resource reservation. | ||

The 2008 upgrade began a continuous resource improvement plan that includes a phased development approach for Cheaha with on-going increases in capacity and feature enhancements being brought into production via an [http://projects.uabgrid.uab.edu/cheaha open community process]. | |||

Software improvements rolled into the 2008 upgrade included grid computing services to access distributed compute resources and orchestrate jobs using the [http://www.gridway.org GridWay] meta-scheduler. An initial 10 Gigabit Ethernet link establishing the UABgrid Research Network was designed to support high speed data transfers between clusters connected to this network. | |||

=== 2009 === | |||

In 2009, annual investment funds were directed toward establishing a fully connected dual data rate Infiniband network between the compute nodes added in 2008 and laying the foundation for a research storage system with a 60TB DDN storage system accessed via the Lustre distributed file system. The Infiniband and storage fabrics were designed to support significant increases in research data sets and their associated analytical demand. | |||

=== 2010 === | |||

In 2010, UAB was awarded an NIH Small Instrumentation Grant (SIG) to further increase analytical and storage capacity. The grant funds were combined with the annual investment funds, adding 576 cores (48 nodes) based on the Intel Westmere 2.66 GHz CPU, a quad data rate Infiniband fabric with 32 uplinks, an additional 120 TB of storage for the DDN fabric, and additional hardware to improve reliability. Additional improvements to the research compute platform involved extending the UAB Research Network to link the BEC and RUST data centers and adding 20TB of user and ancillary services storage. | |||

=== 2012 === | |||

In 2012, UAB IT Research Computing invested in the foundation hardware to expand long term storage and virtual machine capabilities with the acquisition of 12 Dell 720xd systems, each containing 16 cores, 96GB RAM, and 36TB of storage, creating a 192 core and 432TB virtual compute and storage fabric. | |||

Additional hardware investment by the School of Public Health's Section on Statistical Genetics added three 384GB large memory nodes and an additional 48 cores to the QDR Infiniband fabric. | |||

=== 2013 === | |||

In 2013, UAB IT Research Computing acquired an [http://blogs.uabgrid.uab.edu/jpr/2013/03/were-going-with-openstack/ OpenStack cloud and Ceph storage software fabric] through a partnership between Dell and Inktank in order to [http://dev.uabgrid.uab.edu extend cloud computing solutions] to the researchers at UAB and enhance the interfacing capabilities for HPC. | |||

=== 2015 === | |||

UAB IT received $500,000 from the university’s Mission Support Fund for a compute cluster seed expansion of 48 teraflops. This added 936 cores across 40 nodes with 2x12 core 2.5 GHz Intel Xeon E5-2680 v3 compute nodes and FDR InfiniBand interconnect. | |||

UAB received a $500,000 grant from the Alabama Innovation Fund for a three petabyte research storage array. This funding with additional matching from UAB provided a multi-petabyte [https://en.wikipedia.org/wiki/IBM_General_Parallel_File_System GPFS] parallel file system to the cluster which went live in 2016. | |||

=== 2016 === | |||

In 2016 UAB IT Research Computing received additional funding from the Deans of CAS, Engineering, and Public Health to grow the compute capacity provided by the prior year's seed funding. This added compute nodes, providing researchers at UAB with 96 2x12 core (2304 cores total) 2.5 GHz Intel Xeon E5-2680 v3 compute nodes with FDR InfiniBand interconnect. Out of the 96 compute nodes, 36 nodes have 128 GB RAM, 38 nodes have 256 GB RAM, and 14 nodes have 384 GB RAM. There are also four compute nodes with the Intel Xeon Phi 7210 accelerator cards and four compute nodes with the NVIDIA K80 GPUs. More information can be found at [[Resources]]. | |||

In addition to the compute nodes, the six-petabyte GPFS file system came online. This file system provided each user five terabytes of personal space, additional space for shared projects, and greatly expanded scratch storage, all in a single file system. | |||

The 2015 and 2016 investments combined to provide a completely new core for the Cheaha cluster, allowing the retirement of earlier compute generations. | |||

== Grant and Publication Resources == | |||

The following description may prove useful in summarizing the services available via Cheaha. If you are using Cheaha for grant funded research please send information about your grant (funding source and grant number), a statement of intent for the research project and a list of the applications you are using to UAB IT Research Computing. If you are using Cheaha for exploratory research, please send a similar note on your research interest. Finally, any publications that rely on computations performed on Cheaha should include a statement acknowledging the use of UAB Research Computing facilities in your research; see the suggested example below. Please note, your acknowledgment may also need to include an additional statement acknowledging grant-funded hardware. We also ask that you send any references to publications based on your use of Cheaha compute resources. | |||

=== Description of Cheaha for Grants (short) === | |||

UAB IT Research Computing maintains high performance compute and storage resources for investigators. The Cheaha compute cluster provides over 2900 conventional Intel 64-bit x86 CPU cores and 80 accelerators (including 72 NVIDIA P100 GPUs) interconnected via an InfiniBand network and provides 468 TFLOP/s of aggregate theoretical peak performance. High-performance 6.6PB raw GPFS storage on DDN SFA12KX hardware is also connected to these compute nodes via the Infiniband fabric. An additional 20TB of traditional SAN storage is also available for home directories. This general access compute fabric is available to all UAB investigators. | |||

=== Description of Cheaha for Grants (Detailed) === | |||

The Cyberinfrastructure supporting University of Alabama at Birmingham (UAB) investigators includes high performance computing clusters, storage, campus, statewide and regionally connected high-bandwidth networks, and conditioned space for hosting and operating HPC systems, research applications and network equipment. | |||

==== Cheaha HPC system ==== | |||

Cheaha is a campus HPC resource dedicated to enhancing research computing productivity at UAB. Cheaha is managed by UAB Information Technology's Research Computing group (RC) and is available to members of the UAB community in need of increased computational capacity. Cheaha supports high-performance computing (HPC) and high throughput computing (HTC) paradigms. Cheaha is composed of resources that span data centers located in the UAB IT Data Centers in the 936 Building and the RUST Computer Center. Research Computing in open collaboration with the campus research community is leading the design and development of these resources. | |||

==== Compute Resources ==== | |||

Cheaha provides users with a traditional command-line interactive environment with access to many scientific tools that can leverage its dedicated pool of local compute resources. Alternately, users of graphical applications can start a cluster desktop. The local compute pool provides access to two generations of compute hardware based on the x86 64-bit architecture. It includes 96 nodes: 2x12 core (2304 cores total) 2.5 GHz Intel Xeon E5-2680 v3 compute nodes with FDR InfiniBand interconnect. Out of the 96 compute nodes, 36 nodes have 128 GB RAM, 38 nodes have 256 GB RAM, and 14 nodes have 384 GB RAM. There are also four compute nodes with the Intel Xeon Phi 7210 accelerator cards and four compute nodes with the NVIDIA K80 GPUs. The newest generation is composed of 18 nodes: 2x14 core (504 cores total) 2.4GHz Intel Xeon E5-2680 v4 compute nodes with 256GB RAM, four NVIDIA Tesla P100 16GB GPUs per node, and EDR InfiniBand interconnect. The compute nodes combine to provide over 468 TFLOP/s of dedicated computing power. | |||

In addition UAB researchers also have access to regional and national HPC resources such as Alabama Supercomputer Authority (ASA), XSEDE and Open Science Grid (OSG). | |||

==== Storage Resources ==== | |||

The compute nodes on Cheaha are backed by high-performance, 6.6PB raw GPFS storage on DDN SFA12KX hardware connected via the Infiniband fabric. An expansion of the GPFS fabric will double the capacity and is scheduled to be on-line Fall 2018. An additional 20TB of traditional SAN storage is also available for home directories. | |||

==== Network Resources ==== | |||

The UAB Research Network is currently a dedicated 40GE optical connection between the UAB Shared HPC Facility and the RUST Campus Data Center to create a multi-site facility housing the Research Computing System, which leverages the network for connecting storage and compute hosting resources. The network supports direct connection to high-bandwidth regional networks and the capability to connect data intensive research facilities directly with the high performance computing services of the Research Computing System. This network can support very high speed secure connectivity between nodes connected to it for high speed file transfer of very large data sets without the concerns of interfering with other traffic on the campus backbone, ensuring predictable latencies. In addition, the network also consists of a secure Science DMZ with data transfer nodes (DTNs), perfSONAR measurement nodes, and a Bro security node connected directly to the border router that provides a "friction-free" pathway to access external data repositories as well as computational resources. | |||

The campus network backbone is based on a 40 gigabit redundant Ethernet network with 480 gigabit/second back-planes on the core L2/L3 Switch/Routers. For efficient management, a collapsed backbone design is used. Each campus building is connected using 10 Gigabit Ethernet links over single mode optical fiber. Desktops are connected at 1 gigabit/second speed. The campus wireless network blankets classrooms, common areas and most academic office buildings. | |||

UAB connects to the Internet2 high-speed research network via the University of Alabama System Regional Optical Network (UASRON), a University of Alabama System owned and operated DWDM Network offering 100Gbps Ethernet to the Southern Light Rail (SLR)/Southern Crossroads (SoX) in Atlanta, Ga. The UASRON also connects UAB to UA, and UAH, the other two University of Alabama System institutions, and the Alabama Supercomputer Center. UAB is also connected to other universities and schools through Alabama Research and Education Network (AREN). | |||

==== Personnel ==== | |||

UAB IT Research Computing currently maintains a support staff of 10, led by the Assistant Vice President for Research Computing, that includes an HPC Architect-Manager, four software developers, two scientists, a system administrator and a project coordinator. | |||

=== | === Acknowledgment in Publications === | ||

This work was supported in part by the National Science Foundation under Grant No. OAC-1541310, the University of Alabama at Birmingham, and the Alabama Innovation Fund. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation or the University of Alabama at Birmingham. | |||

== System Profile == | == System Profile == | ||

=== Performance === | |||

{{CheahaTflops}} | |||

=== Hardware === | === Hardware === | ||

Cheaha | The Cheaha Compute Platform includes three generations of commodity compute hardware, totaling 868 compute cores, 2.8TB of RAM, and over 200TB of storage. | ||

The hardware is grouped into generations designated gen1, gen2, and gen3 (oldest to newest). The following descriptions highlight the hardware profile for each generation. | |||

* Generation 1 (gen1) -- 64 2-CPU AMD 1.6 GHz compute nodes with Gigabit interconnect. This is the original hardware collection purchased with NSF EPSCoR funds in 2005, approx $150K. These nodes are sometimes called the "Verari" nodes. These nodes are tagged as "verari-compute-#-#" in the ROCKS naming convention. | |||

* Generation 2 (gen2) -- 24 2x4 core (192 cores total) 3.0 GHz Intel compute nodes with dual data rate Infiniband interconnect and the initial high-perf storage implementation using 60TB DDN. This is the hardware collection purchased exclusively with the annual VPIT funds allocation, approx $150K/yr for the 2008 and 2009 fiscal years. These nodes are sometimes confusingly called "cheaha2" or "cheaha" nodes. These nodes are tagged as "cheaha-compute-#-#" in the ROCKS naming convention. | |||

* Generation 3 (gen3) -- 48 2x6 core (576 cores total) 2.66 GHz Intel compute nodes with quad data rate Infiniband, ScaleMP, and the high-perf storage build-out for capacity and redundancy with 120TB DDN. This is the hardware collection purchased with a combination of the NIH SIG funds and some of the 2010 annual VPIT investment. These nodes were given the code name "sipsey" and tagged as such in the node naming for the queue system. These nodes are tagged as "sipsey-compute-#-#" in the ROCKS naming convention. 16 of the gen3 nodes (sipsey-compute-0-1 thru sipsey-compute-0-16) were upgraded in 2014 from 48GB to 96GB of memory per node. | |||

* Generation 4 (gen4) -- 3 16 core (48 cores total) compute nodes. This hardware collection was purchased by [http://www.soph.uab.edu/ssg/people/tiwari Dr. Hemant Tiwari of SSG]. These nodes were given the code name "ssg" and tagged as such in the node naming for the queue system. These nodes are tagged as "ssg-compute-0-#" in the ROCKS naming convention. | |||

* Generation 6 (gen6) -- | |||

** 44 Compute Nodes with two 12 core processors (Intel Xeon E5-2680 v3 2.5GHz) with 128GB DDR4 RAM, FDR InfiniBand and 10GigE network cards (4 nodes with NVIDIA K80 GPUs and 4 nodes with Intel Xeon Phi 7120P accelerators) | |||

** 38 Compute Nodes with two 12 core processors (Intel Xeon E5-2680 v3 2.5GHz) with 256GB DDR4 RAM, FDR InfiniBand and 10GigE network cards | |||

** 14 Compute Nodes with two 12 core processors (Intel Xeon E5-2680 v3 2.5GHz) with 384GB DDR4 RAM, FDR InfiniBand and 10GigE network cards | |||

** FDR InfiniBand Switch | |||

** 10Gigabit Ethernet Switch | |||

** Management node and gigabit switch for cluster management | |||

** Bright Advanced Cluster Management software licenses | |||

Summarized, Cheaha's compute pool includes: | |||

* gen4 is 48 cores of [http://ark.intel.com/products/64583/Intel-Xeon-Processor-E5-2680-20M-Cache-2_70-GHz-8_00-GTs-Intel-QPI 2.70GHz eight-core Intel Xeon E5-2680 processors] with 24GB of RAM per core or 384GB per node | |||

* gen3 is 192 cores of [http://ark.intel.com/products/47922/Intel-Xeon-Processor-X5650-12M-Cache-2_66-GHz-6_40-GTs-Intel-QPI?q=x5650 2.67GHz six-core Intel Xeon X5650 processors] with 8GB RAM per core or 96GB per node | |||

* gen3 is 384 cores of [http://ark.intel.com/products/47922/Intel-Xeon-Processor-X5650-12M-Cache-2_66-GHz-6_40-GTs-Intel-QPI?q=x5650 2.67GHz six-core Intel Xeon X5650 processors] with 4GB RAM per core or 48GB per node | |||

* gen2 is 192 cores of [http://ark.intel.com/products/33083/Intel-Xeon-Processor-E5450-12M-Cache-3_00-GHz-1333-MHz-FSB 3.0GHz quad-core Intel Xeon E5450 processors] with 2GB RAM per core | |||

* gen1 is 100 cores of 1.6GHz AMD Opteron 242 processors with 1GB RAM per core | |||

{|border="1" cellpadding="2" cellspacing="0" | |||

|+ Physical Nodes | |||

|- bgcolor=grey | |||

!gen!!queue!!#nodes!!cores/node!!RAM/node | |||

|- | |||

|gen6|| default || 44 || 24 || 128G | |||

|- | |||

|gen6|| default || 38 || 24 || 256G | |||

|- | |||

|gen6|| default || 14 || 24 || 384G | |||

|- | |||

|gen5||Ceph/OpenStack|| 12 || 20 || 96G | |||

|- | |||

|gen4||ssg||3||16||384G | |||

|- | |||

|gen3||sipsey||16||12||96G | |||

|- | |||

|gen3||sipsey||32||12||48G | |||

|- | |||

|gen2||cheaha||24||8||16G | |||

|} | |||
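The core counts implied by the Physical Nodes table can be checked with a few lines of arithmetic. The sketch below simply multiplies nodes by cores per node for each table row and sums by generation; the variable and function names are illustrative, and the totals are derived from the table alone, not from an official inventory.

```python
from collections import defaultdict

# Rows copied from the Physical Nodes table: (generation, queue, nodes, cores per node)
PHYSICAL_NODES = [
    ("gen6", "default", 44, 24),
    ("gen6", "default", 38, 24),
    ("gen6", "default", 14, 24),
    ("gen5", "Ceph/OpenStack", 12, 20),
    ("gen4", "ssg", 3, 16),
    ("gen3", "sipsey", 16, 12),
    ("gen3", "sipsey", 32, 12),
    ("gen2", "cheaha", 24, 8),
]


def cores_by_generation(rows):
    """Sum nodes * cores-per-node for each hardware generation."""
    totals = defaultdict(int)
    for gen, _queue, nodes, cores_per_node in rows:
        totals[gen] += nodes * cores_per_node
    return dict(totals)


totals = cores_by_generation(PHYSICAL_NODES)
for gen in sorted(totals, reverse=True):
    print(f"{gen}: {totals[gen]} cores")
print(f"all table rows: {sum(totals.values())} cores")
```

The gen6 rows sum to 96 nodes and 2304 cores, which agrees with the figures quoted for the 2016 expansion.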

=== Software === | === Software === | ||

Details of the software available on Cheaha can be found on the [ | Details of the software available on Cheaha can be found on the [https://docs.uabgrid.uab.edu/wiki/Cheaha_Software Installed software page], an overview follows. | ||

Cheaha uses [http://modules.sourceforge.net/ Environment Modules] to support account configuration. Please follow these [http://me.eng.uab.edu/wiki/index.php?title=Cheaha#Environment_Modules specific steps for using environment modules]. | Cheaha uses [http://modules.sourceforge.net/ Environment Modules] to support account configuration. Please follow these [http://me.eng.uab.edu/wiki/index.php?title=Cheaha#Environment_Modules specific steps for using environment modules]. | ||

Cheaha's software stack is built with the [http://www. | Cheaha's software stack is built with the [http://www.brightcomputing.com Bright Cluster Manager]. Cheaha's operating system is CentOS with the following major cluster components: | ||

* | * BrightCM 7.2 | ||

* | * CentOS 7.2 x86_64 | ||

* [ | * [[Slurm]] 15.08 | ||

A summary of the available computational software and tools available includes: | A brief summary of some of the available computational software and tools includes: | ||

* Amber | * Amber | ||

* FFTW | * FFTW | ||

| Line 60: | Line 179: | ||

* R | * R | ||

* OpenMPI | * OpenMPI | ||

* MATLAB | |||

=== Network === | === Network === | ||

Cheaha is connected to the UAB Research Network which provides a dedicated 10Gbps networking backplane between clusters located the | Cheaha is connected to the UAB Research Network which provides a dedicated 10Gbps networking backplane between clusters located in the 936 data center and the campus network core. Data transfer rates of almost 8Gbps between these hosts have been demonstrated using GridFTP, a multi-channel file transfer service that is used to move data between clusters as part of the job management operations. This performance promises very efficient job management and the seamless integration of other clusters as connectivity to the research network is expanded. | ||

== | === Benchmarks === | ||

The continuous resource improvement process involves collecting benchmarks of the system. Among the measures of greatest interest to users of the system are benchmarks of specific application codes. The following benchmarks have been performed on the system and will be further expanded as additional benchmarks are performed. | |||

* [[Cheaha-BGL_Comparison|Cheaha-BGL Comparison]] | |||

* [[Gromacs_Benchmark|Gromacs]] | |||

* [[NAMD_Benchmarks|NAMD]] | |||

=== | === Cluster Usage Statistics === | ||

Cheaha uses Bright Cluster Manager to report cluster performance data. This information provides a helpful overview of the current and historical operating stats for Cheaha. You can access the status monitoring page [https://cheaha-master01.rc.uab.edu/userportal/ here] (accessible only on the UAB network or through VPN). | |||

== Availability == | |||

Cheaha is a general-purpose computer resource made available to the UAB community by UAB IT. As such, it is available for legitimate research and educational needs and is governed by [http://www.uabgrid.uab.edu/aup UAB's Acceptable Use Policy (AUP)] for computer resources. | |||

Many software packages commonly used across UAB are available via Cheaha. | |||

To request access to Cheaha, please [mailto:support@listserv.uab.edu send a request] to the cluster support group. | |||

Cheaha's intended use implies broad access to the community; however, no guarantees are made that specific computational resources will be available to all users. Availability guarantees can only be made for reserved resources. | |||

=== Secure Shell Access === | |||

Please configure your client secure shell software to use the official host name to access Cheaha: | |||

<pre> | <pre> | ||

cheaha.rc.uab.edu | |||

</pre> | </pre> | ||
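As a minimal sketch of using that host name with a command-line OpenSSH client, the snippet below builds the login command. The user name shown is a placeholder, not a real account, and the helper function is illustrative only.

```python
import shlex

CHEAHA_HOST = "cheaha.rc.uab.edu"  # official host name given above


def ssh_command(username, host=CHEAHA_HOST):
    """Return the argument list for an interactive SSH login."""
    return ["ssh", f"{username}@{host}"]


# "your_username" is a placeholder; substitute your own account name.
print(shlex.join(ssh_command("your_username")))
```

Running the printed command in a terminal should prompt for your credentials, assuming you already have a Cheaha account.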

== Scheduling Framework == | == Scheduling Framework == | ||

[http://slurm.schedmd.com/ Slurm] is a queue management system; the name stands for Simple Linux Utility for Resource Management. Slurm was developed at the Lawrence Livermore National Lab and currently runs some of the largest compute clusters in the world. '''[[Slurm]]''' is now the primary job manager on Cheaha; it replaces Sun Grid Engine (SGE), the job manager used earlier. | |||

Slurm is similar in many ways to GridEngine or most other queue systems. You write a batch script then submit it to the queue manager (scheduler). The queue manager then schedules your job to run on the queue (or '''partition''' in Slurm parlance) that you designate. Below we will provide an outline of how to submit jobs to Slurm, how Slurm decides when to schedule your job, and how to monitor progress. | |||
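The write-then-submit workflow described above can be sketched as follows. This is an illustration under stated assumptions, not Cheaha's recommended job script: the partition name <code>example-partition</code>, the job name, and the resource values are all hypothetical, and the helper functions are invented for this example. The <code>#SBATCH</code> options used (<code>--job-name</code>, <code>--partition</code>, <code>--ntasks</code>, <code>--time</code>, <code>--mem-per-cpu</code>, <code>--output</code>) are standard <code>sbatch</code> options.

```python
import subprocess


def build_batch_script(job_name, partition, command, ntasks=1,
                       time_limit="00:10:00", mem_per_cpu_mb=1024):
    """Return the text of a minimal Slurm batch script."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --partition={partition}",      # the queue ("partition") to run in
        f"#SBATCH --ntasks={ntasks}",
        f"#SBATCH --time={time_limit}",          # wall-clock limit, HH:MM:SS
        f"#SBATCH --mem-per-cpu={mem_per_cpu_mb}",  # megabytes per allocated CPU
        f"#SBATCH --output={job_name}-%j.out",   # %j expands to the job ID
        "",
        command,
        "",
    ]
    return "\n".join(lines)


def submit(script_text):
    """Submit the script by piping it to sbatch; requires a host with Slurm installed."""
    result = subprocess.run(["sbatch"], input=script_text, text=True,
                            capture_output=True, check=True)
    return result.stdout.strip()


# "example-partition" is a hypothetical partition name used for illustration.
script = build_batch_script("hello", "example-partition", "srun hostname")
print(script)
```

On a login node, calling <code>submit(script)</code> would hand the script to the scheduler, and <code>squeue -u $USER</code> would list the job while it is pending or running.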

== | == Support == | ||

Cheaha | Operational support for Cheaha is provided by the Research Computing group in UAB IT. For questions regarding the operational status of Cheaha please send your request to [mailto:support@listserv.uab.edu support@listserv.uab.edu]. As a user of Cheaha you will automatically be subscribed to the hpc-announce email list. This subscription is mandatory for all users of Cheaha. It is our way of communicating important information regarding Cheaha to you. The traffic on this list is restricted to official communication and has a very low volume. | ||

We have limited capacity, however, to support non-operational issues like "How do I write a job script" or "How do I compile a program". For such requests, you may find it more fruitful to send your questions to the hpc-users email list and request help from our peers in the HPC community at UAB. As with all mailing lists, please observe [http://lifehacker.com/5473859/basic-etiquette-for-email-lists-and-forums common mailing etiquette]. | |||

Finally, please remember that as you learned about HPC from others it becomes part of your responsibility to help others on their quest. You should update this documentation or respond to mailing list requests of others. | |||

You can subscribe to hpc-users by sending an email to: | |||

[mailto:sympa@vo.uabgrid.uab.edu?subject=subscribe%20hpc-users sympa@vo.uabgrid.uab.edu with the subject ''subscribe hpc-users'']. | |||

You can unsubscribe from hpc-users by sending an email to: | |||

= | [mailto:sympa@vo.uabgrid.uab.edu?subject=unsubscribe%20hpc-users sympa@vo.uabgrid.uab.edu with the subject ''unsubscribe hpc-users'']. | ||

You can review archives of the list in the [http://vo.uabgrid.uab.edu/sympa/arc/hpc-users web hpc-archives]. | |||

If you need help using the list service please send an email to: | |||

[mailto:sympa@vo.uabgrid.uab.edu?subject=help sympa@vo.uabgrid.uab.edu with the subject ''help''] | |||

If you have questions about the operation of the list itself, please send an email to the owners of the list: | |||

[mailto:hpc-users-request@vo.uabgrid.uab.edu hpc-users-request@vo.uabgrid.uab.edu with a subject relevant to your issue with the list] | |||

If you are interested in contributing to the enhancement of HPC features at UAB or would like to talk to other cluster administrators, [mailto:sympa@vo.uabgrid.uab.edu?subject=subscribe%20hpc-dev please join the hpc developers community at UAB]. | |||

Revision as of 19:38, 11 February 2019

|

HPC Web Portal now in Beta The new HPC web portal is now available. We encourage you to try it out as an alternative to traditional clients. It provides file, shell, and desktop access to the cluster within your web browser. |

Cheaha is a campus resource dedicated to enhancing research computing productivity at UAB. Cheaha is managed by UAB Information Technology's Research Computing group (UAB ITRC) and is available to members of the UAB community in need of increased computational capacity. Cheaha supports high-performance computing (HPC) and high throughput computing (HTC) paradigms.

Cheaha provides users with a traditional command-line interactive environment with access to many scientific tools that can leverage its dedicated pool of local compute resources. Alternately, users of graphical applications can start a cluster desktop. The local compute pool provides access to compute hardware based on the x86-64 64-bit architecture. The compute resources are organized into a unified Research Computing System. The compute fabric for this system is anchored by the Cheaha cluster, a commodity cluster with approximately 2400 cores connected by low-latency Fourteen Data Rate (FDR) InfiniBand networks. The compute nodes are backed by 6.6PB raw GPFS storage on DDN SFA12KX hardware, an additional 20TB available for home directories on a traditional Hitachi SAN, and other ancillary services. The compute nodes combine to provide over 110TFlops of dedicated computing power.

Cheaha is composed of resources that span data centers located in the UAB Shared Computing facility UAB 936 Building and the RUST Computer Center. Resource design and development is led by UAB IT Research Computing in open collaboration with community members. Operational support is provided by UAB IT's Research Computing group.

Cheaha is named in honor of Cheaha Mountain, the highest peak in the state of Alabama. Cheaha is a popular destination whose summit offers clear vistas of the surrounding landscape. (Cheaha Mountain photo-streams on Flickr and Picasa).

Using

Getting Started

For information on getting an account, logging in, and running a job, please see Getting Started.

History

2005

In 2002 UAB was awarded an infrastructure development grant through the NSF EPSCoR program. This led to the 2005 acquisition of a 64-node compute cluster with two AMD Opteron 242 1.6 GHz CPUs per node (128 total cores). This cluster was named Cheaha. Cheaha expanded the compute capacity available at UAB and was the first general-access resource for the community. It led to expanded roles for UAB IT in research computing support through the development of the UAB Shared HPC Facility in BEC and provided further engagement in Globus-based grid computing resource development on campus via UABgrid and regionally via SURAgrid.

2008

In 2008, money was allocated by UAB IT for hardware upgrades, which led to the acquisition in August 2008 of an additional 192 cores based on a Dell clustering solution with Intel Quad-Core E5450 3.0 GHz CPUs. This upgrade migrated Cheaha's core infrastructure to the Dell blade clustering solution. It provided a threefold increase in processor density over the original hardware and enabled more computing power to be located in the same physical space with room for expansion, an important consideration in light of the continued growth in processing demand. This hardware represented a major technology upgrade that included space for additional expansion to address overall capacity demand and enable resource reservation.

The 2008 upgrade began a continuous resource improvement plan that includes a phased development approach for Cheaha with on-going increases in capacity and feature enhancements being brought into production via an open community process.

Software improvements rolled into the 2008 upgrade included grid computing services to access distributed compute resources and orchestrate jobs using the GridWay meta-scheduler. An initial 10 Gigabit Ethernet link establishing the UABgrid Research Network was designed to support high-speed data transfers between clusters connected to this network.

2009

In 2009, annual investment funds were directed toward establishing a fully connected double data rate (DDR) InfiniBand network between the compute nodes added in 2008 and laying the foundation for a research storage system with a 60TB DDN storage system accessed via the Lustre distributed file system. The InfiniBand and storage fabrics were designed to support significant increases in research data sets and their associated analytical demand.

2010

In 2010, UAB was awarded an NIH Small Instrumentation Grant (SIG) to further increase analytical and storage capacity. The grant funds were combined with the annual investment funds, adding 576 cores (48 nodes) based on the Intel Westmere 2.66 GHz CPU, a quad data rate InfiniBand fabric with 32 uplinks, an additional 120 TB of storage for the DDN fabric, and additional hardware to improve reliability. Additional improvements to the research compute platform involved extending the UAB Research Network to link the BEC and RUST data centers and adding 20TB of user and ancillary services storage.

2012

In 2012, UAB IT Research Computing invested in the foundation hardware to expand long-term storage and virtual machine capabilities with the acquisition of 12 Dell 720xd systems, each containing 16 cores, 96GB RAM, and 36TB of storage, creating a 192 core and 432TB virtual compute and storage fabric.

Additional hardware investment by the School of Public Health's Section on Statistical Genetics added three 384GB large memory nodes and an additional 48 cores to the QDR InfiniBand fabric.

2013

In 2013, UAB IT Research Computing acquired an OpenStack cloud and Ceph storage software fabric through a partnership between Dell and Inktank in order to extend cloud computing solutions to the researchers at UAB and enhance the interfacing capabilities for HPC.

2015

UAB IT received $500,000 from the university’s Mission Support Fund for a compute cluster seed expansion of 48 teraflops. This added 936 cores across 40 nodes with 2x12 core 2.5 GHz Intel Xeon E5-2680 v3 compute nodes and FDR InfiniBand interconnect.

UAB received a $500,000 grant from the Alabama Innovation Fund for a three petabyte research storage array. This funding with additional matching from UAB provided a multi-petabyte GPFS parallel file system to the cluster which went live in 2016.

2016

In 2016, UAB IT Research Computing received additional funding from the Deans of CAS, Engineering, and Public Health to grow the compute capacity provided by the prior year's seed funding. This added compute nodes, providing researchers at UAB with 96 2x12 core (2304 cores total) 2.5 GHz Intel Xeon E5-2680 v3 compute nodes with FDR InfiniBand interconnect. Out of the 96 compute nodes, 36 nodes have 128 GB RAM, 38 nodes have 256 GB RAM, and 14 nodes have 384 GB RAM. There are also four compute nodes with the Intel Xeon Phi 7210 accelerator cards and four compute nodes with the NVIDIA K80 GPUs. More information can be found at Resources.

In addition to the compute, the GPFS six petabyte file system came online. This file system provided each user five terabytes of personal space, additional space for shared projects, and greatly expanded scratch storage, all in a single file system.

The 2015 and 2016 investments combined to provide a completely new core for the Cheaha cluster, allowing the retirement of earlier compute generations.

Grant and Publication Resources

The following description may prove useful in summarizing the services available via Cheaha. If you are using Cheaha for grant-funded research, please send information about your grant (funding source and grant number), a statement of intent for the research project, and a list of the applications you are using to UAB IT Research Computing. If you are using Cheaha for exploratory research, please send a similar note on your research interest. Finally, any publications that rely on computations performed on Cheaha should include a statement acknowledging the use of UAB Research Computing facilities in your research; see the suggested example below. Please note that your acknowledgment may also need to include an additional statement acknowledging grant-funded hardware. We also ask that you send any references to publications based on your use of Cheaha compute resources.

Description of Cheaha for Grants (Short)

UAB IT Research Computing maintains high performance compute and storage resources for investigators. The Cheaha compute cluster provides over 2900 conventional Intel 64-bit x86 CPU cores and 80 accelerators (including 72 NVIDIA P100 GPUs) interconnected via an InfiniBand network and provides 468 TFLOP/s of aggregate theoretical peak performance. High-performance, 6.6PB raw GPFS storage on DDN SFA12KX hardware is also connected to these compute nodes via the InfiniBand fabric. An additional 20TB of traditional SAN storage is also available for home directories. This general access compute fabric is available to all UAB investigators.

Description of Cheaha for Grants (Detailed)

The Cyberinfrastructure supporting University of Alabama at Birmingham (UAB) investigators includes high performance computing clusters, storage, campus, statewide and regionally connected high-bandwidth networks, and conditioned space for hosting and operating HPC systems, research applications and network equipment.

Cheaha HPC system

Cheaha is a campus HPC resource dedicated to enhancing research computing productivity at UAB. Cheaha is managed by UAB Information Technology's Research Computing group (RC) and is available to members of the UAB community in need of increased computational capacity. Cheaha supports high-performance computing (HPC) and high throughput computing (HTC) paradigms. Cheaha is composed of resources that span data centers located in the UAB IT Data Centers in the 936 Building and the RUST Computer Center. Research Computing in open collaboration with the campus research community is leading the design and development of these resources.

Compute Resources

Cheaha provides users with a traditional command-line interactive environment with access to many scientific tools that can leverage its dedicated pool of local compute resources. Alternatively, users of graphical applications can start a cluster desktop. The local compute pool provides access to two generations of compute hardware based on the x86 64-bit architecture. It includes 96 nodes: 2x12 core (2304 cores total) 2.5 GHz Intel Xeon E5-2680 v3 compute nodes with FDR InfiniBand interconnect. Out of the 96 compute nodes, 36 nodes have 128 GB RAM, 38 nodes have 256 GB RAM, and 14 nodes have 384 GB RAM. There are also four compute nodes with the Intel Xeon Phi 7210 accelerator cards and four compute nodes with the NVIDIA K80 GPUs. The newest generation is composed of 18 nodes: 2x14 core (504 cores total) 2.4 GHz Intel Xeon E5-2680 v4 compute nodes with 256GB RAM, four NVIDIA Tesla P100 16GB GPUs per node, and EDR InfiniBand interconnect. The compute nodes combine to provide over 468 TFLOP/s of dedicated computing power. In addition, UAB researchers have access to regional and national HPC resources such as the Alabama Supercomputer Authority (ASA), XSEDE, and Open Science Grid (OSG).

Storage Resources

The compute nodes on Cheaha are backed by high-performance, 6.6PB raw GPFS storage on DDN SFA12KX hardware connected via the InfiniBand fabric. An expansion of the GPFS fabric will double the capacity and is scheduled to be online in Fall 2018. An additional 20TB of traditional SAN storage is also available for home directories.

Network Resources

The UAB Research Network is currently a dedicated 40GE optical connection between the UAB Shared HPC Facility and the RUST Campus Data Center to create a multi-site facility housing the Research Computing System, which leverages the network for connecting storage and compute hosting resources. The network supports direct connection to high-bandwidth regional networks and the capability to connect data-intensive research facilities directly with the high performance computing services of the Research Computing System. This network can support very high speed secure connectivity between nodes connected to it for high-speed file transfer of very large data sets without interfering with other traffic on the campus backbone, ensuring predictable latencies. In addition, the network also consists of a secure Science DMZ with data transfer nodes (DTNs), perfSONAR measurement nodes, and a Bro security node connected directly to the border router that provide a "friction-free" pathway to access external data repositories as well as computational resources.

The campus network backbone is based on a 40 gigabit redundant Ethernet network with 480 gigabit/second back-planes on the core L2/L3 Switch/Routers. For efficient management, a collapsed backbone design is used. Each campus building is connected using 10 Gigabit Ethernet links over single mode optical fiber. Desktops are connected at 1 gigabit/second. The campus wireless network blankets classrooms, common areas, and most academic office buildings.

UAB connects to the Internet2 high-speed research network via the University of Alabama System Regional Optical Network (UASRON), a University of Alabama System owned and operated DWDM Network offering 100Gbps Ethernet to the Southern Light Rail (SLR)/Southern Crossroads (SoX) in Atlanta, Ga. The UASRON also connects UAB to UA, and UAH, the other two University of Alabama System institutions, and the Alabama Supercomputer Center. UAB is also connected to other universities and schools through Alabama Research and Education Network (AREN).

Personnel

UAB IT Research Computing currently maintains a support staff of 10, led by the Assistant Vice President for Research Computing, that includes an HPC Architect-Manager, four software developers, two scientists, a system administrator, and a project coordinator.

Acknowledgment in Publications

This work was supported in part by the National Science Foundation under Grant No. OAC-1541310, the University of Alabama at Birmingham, and the Alabama Innovation Fund. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation or the University of Alabama at Birmingham.

System Profile

Performance

| Generation | Type | Nodes | CPUs per Node | Cores Per CPU | Total Cores | Clock Speed (GHz) | Instructions Per Cycle | Hardware Reference |

|---|---|---|---|---|---|---|---|---|

| Gen 6† | Intel Xeon E5-2680 v3 | 96 | 2 | 12 | 2304 | 2.50 | 16 | Intel Xeon E5-2680 v3 |

| Gen 7†† | Intel Xeon E5-2680 v4 | 18 | 2 | 14 | 504 | 2.40 | 16 | Intel Xeon E5-2680 v4 |

| Gen 8 | Intel Xeon E5-2680 v4 | 35 | 2 | 12 | 840 | 2.50 | 16 | Intel Xeon E5-2680 v3 |

| Gen 9 | Intel Xeon Gold 6248R | 52 | 2 | 24 | 2496 | 3.0 | 16 | 3.0GHz Intel Xeon Gold 6248R |

| Generation | Theoretical Peak Tera-FLOPS |

|---|---|

| Gen 6† | 110 |

| Gen 7†† | 358 |

| Gen 8 | TBD |

| Gen 9 | TBD |

† Includes four Intel Xeon Phi 7210 accelerators and four NVIDIA K80 GPUs.

†† Includes 72 NVIDIA Tesla P100 16GB GPUs.
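The CPU portion of a theoretical peak figure can be approximated from the columns of the performance table as nodes x CPUs per node x cores per CPU x clock speed x operations per cycle. The following is a minimal sketch in Python, assuming the "Instructions Per Cycle" column can be read as floating-point operations per cycle per core. The function name `peak_tflops` is made up for illustration. The results cover CPUs only, so they will not match the published Gen 6 and Gen 7 totals, which also count accelerators and GPUs.

```python
def peak_tflops(nodes, cpus_per_node, cores_per_cpu, clock_ghz, ops_per_cycle):
    """Approximate CPU-only theoretical peak in TFLOP/s.

    Assumes ops_per_cycle means floating-point operations per cycle
    per core (an interpretation of the table, not a confirmed definition).
    """
    total_cores = nodes * cpus_per_node * cores_per_cpu
    gflops = total_cores * clock_ghz * ops_per_cycle
    return gflops / 1000.0


# Rows copied from the performance table:
# (nodes, CPUs per node, cores per CPU, clock in GHz, operations per cycle)
generations = {
    "Gen 6": (96, 2, 12, 2.50, 16),
    "Gen 7": (18, 2, 14, 2.40, 16),
    "Gen 8": (35, 2, 12, 2.50, 16),
    "Gen 9": (52, 2, 24, 3.0, 16),
}

cpu_peaks = {name: peak_tflops(*spec) for name, spec in generations.items()}

for name, tflops in cpu_peaks.items():
    print(f"{name}: {tflops:.1f} TFLOP/s (CPU only)")
```

Under these assumptions the Gen 6 CPUs alone contribute roughly 92 TFLOP/s, with the remainder of the published figure coming from the accelerators noted in the footnotes.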

Hardware

The Cheaha Compute Platform includes three generations of commodity compute hardware, totaling 868 compute cores, 2.8TB of RAM, and over 200TB of storage.

The hardware is grouped into generations designated gen1, gen2, and gen3 (oldest to newest). The following descriptions highlight the hardware profile for each generation.

- Generation 1 (gen1) -- 64 2-CPU AMD 1.6 GHz compute nodes with Gigabit interconnect. This is the original hardware collection purchased with NSF EPSCoR funds in 2005, approx $150K. These nodes are sometimes called the "Verari" nodes. These nodes are tagged as "verari-compute-#-#" in the ROCKS naming convention.

- Generation 2 (gen2) -- 24 2x4 core (192 cores total) 3.0 GHz Intel compute nodes with double data rate (DDR) InfiniBand interconnect and the initial high-perf storage implementation using 60TB DDN. This is the hardware collection purchased exclusively with the annual VPIT funds allocation, approx $150K/yr for the 2008 and 2009 fiscal years. These nodes are sometimes confusingly called "cheaha2" or "cheaha" nodes. These nodes are tagged as "cheaha-compute-#-#" in the ROCKS naming convention.

- Generation 3 (gen3) -- 48 2x6 core (576 cores total) 2.66 GHz Intel compute nodes with quad data rate InfiniBand, ScaleMP, and the high-perf storage build-out for capacity and redundancy with 120TB DDN. This is the hardware collection purchased with a combination of the NIH SIG funds and some of the 2010 annual VPIT investment. These nodes were given the code name "sipsey" and tagged as such in the node naming for the queue system. These nodes are tagged as "sipsey-compute-#-#" in the ROCKS naming convention. 16 of the gen3 nodes (sipsey-compute-0-1 through sipsey-compute-0-16) were upgraded in 2014 from 48GB to 96GB of memory per node.

- Generation 4 (gen4) -- 3 16 core (48 cores total) compute nodes. This hardware collection was purchased by Dr. Hemant Tiwari of SSG. These nodes were given the code name "ssg" and tagged as such in the node naming for the queue system. These nodes are tagged as "ssg-compute-0-#" in the ROCKS naming convention.

- Generation 6 (gen6) --

- 44 Compute Nodes with two 12 core processors (Intel Xeon E5-2680 v3 2.5GHz) with 128GB DDR4 RAM, FDR InfiniBand and 10GigE network cards (4 nodes with NVIDIA K80 GPUs and 4 nodes with Intel Xeon Phi 7120P accelerators)

- 38 Compute Nodes with two 12 core processors (Intel Xeon E5-2680 v3 2.5GHz) with 256GB DDR4 RAM, FDR InfiniBand and 10GigE network cards

- 14 Compute Nodes with two 12 core processors (Intel Xeon E5-2680 v3 2.5GHz) with 384GB DDR4 RAM, FDR InfiniBand and 10GigE network cards

- FDR InfiniBand Switch

- 10Gigabit Ethernet Switch

- Management node and gigabit switch for cluster management

- Bright Advanced Cluster Management software licenses

Summarized, Cheaha's compute pool includes:

- gen4 is 48 cores of 2.70GHz eight-core Intel Xeon E5-2680 processors with 24GB of RAM per core or 384GB total

- gen3 is 192 cores of 2.67GHz six-core Intel Xeon X5650 processors with 8GB RAM per core or 96GB total

- gen3 is 384 cores of 2.67GHz six-core Intel Xeon X5650 processors with 4GB RAM per core or 48GB total

- gen2 is 192 cores of 3.0GHz quad-core Intel Xeon E5450 processors with 2GB RAM per core

- gen1 is 100 cores of 1.6GHz AMD Opteron 242 processors with 1GB RAM per core

| gen | queue | #nodes | cores/node | RAM/node |

|---|---|---|---|---|

| gen6 | default | 44 | 24 | 128G |

| gen6 | default | 38 | 24 | 256G |

| gen6 | default | 14 | 24 | 384G |

| gen5 | Ceph/OpenStack | 12 | 20 | 96G |

| gen4 | ssg | 3 | 16 | 384G |

| gen3 | sipsey | 16 | 12 | 96G |

| gen3 | sipsey | 32 | 12 | 48G |

| gen2 | cheaha | 24 | 8 | 16G |
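The per-generation core counts implied by the table above can be cross-checked by multiplying the node count by the cores per node and summing by generation. The following Python sketch does this for the rows as listed. The function name `totals_by_generation` is hypothetical, and the sketch only restates arithmetic on the table; it says nothing about which nodes are currently in service.

```python
from collections import defaultdict

# (generation, queue, number of nodes, cores per node), copied from the table above
NODE_TABLE = [
    ("gen6", "default", 44, 24),
    ("gen6", "default", 38, 24),
    ("gen6", "default", 14, 24),
    ("gen5", "Ceph/OpenStack", 12, 20),
    ("gen4", "ssg", 3, 16),
    ("gen3", "sipsey", 16, 12),
    ("gen3", "sipsey", 32, 12),
    ("gen2", "cheaha", 24, 8),
]


def totals_by_generation(rows):
    """Return {generation: (total nodes, total cores)} for the given rows."""
    nodes = defaultdict(int)
    cores = defaultdict(int)
    for gen, _queue, n_nodes, cores_per_node in rows:
        nodes[gen] += n_nodes
        cores[gen] += n_nodes * cores_per_node
    return {gen: (nodes[gen], cores[gen]) for gen in nodes}


totals = totals_by_generation(NODE_TABLE)
for gen, (n_nodes, n_cores) in totals.items():
    print(f"{gen}: {n_nodes} nodes, {n_cores} cores")
```

For example, the three gen6 rows sum to 96 nodes and 2304 cores, which agrees with the Gen 6 row of the performance table.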

Software

Details of the software available on Cheaha can be found on the Installed software page; an overview follows.

Cheaha uses Environment Modules to support account configuration. Please follow these specific steps for using environment modules.

Cheaha's software stack is built with the Bright Cluster Manager. Cheaha's operating system is CentOS with the following major cluster components:

- BrightCM 7.2

- CentOS 7.2 x86_64

- Slurm 15.08

A brief summary of some of the available computational software and tools includes:

- Amber

- FFTW

- Gromacs

- GSL

- NAMD

- VMD

- Intel Compilers

- GNU Compilers

- Java

- R

- OpenMPI

- MATLAB

Network

Cheaha is connected to the UAB Research Network, which provides a dedicated 10Gbps networking backplane between clusters located in the 936 data center and the campus network core. Data transfer rates of almost 8Gbps between these hosts have been demonstrated using GridFTP, a multi-channel file transfer service that is used to move data between clusters as part of the job management operations. This performance promises very efficient job management and the seamless integration of other clusters as connectivity to the research network is expanded.
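To put these rates in perspective, the time needed to move a data set is its size in bits divided by the sustained transfer rate. The sketch below is a rough estimate in Python that ignores protocol overhead and disk speed. The 1 TB data set size is a made-up example, and `transfer_seconds` is a hypothetical helper name.

```python
def transfer_seconds(size_bytes, rate_gbps):
    """Idealized transfer time in seconds for size_bytes at rate_gbps.

    Uses decimal units: 1 Gbps = 1e9 bits per second.
    Ignores protocol overhead, storage speed, and contention.
    """
    if rate_gbps <= 0:
        raise ValueError("rate_gbps must be positive")
    return (size_bytes * 8) / (rate_gbps * 1e9)


ONE_TB = 10**12  # hypothetical data set size: one decimal terabyte, in bytes

at_demonstrated_rate = transfer_seconds(ONE_TB, 8.0)   # rate demonstrated with GridFTP
at_line_rate = transfer_seconds(ONE_TB, 10.0)          # nominal link capacity

print(f"1 TB at 8 Gbps:  {at_demonstrated_rate / 60:.1f} minutes")
print(f"1 TB at 10 Gbps: {at_line_rate / 60:.1f} minutes")
```

Under these idealized assumptions, a 1 TB data set moves in about 17 minutes at 8Gbps, compared with about 13 minutes at the full 10Gbps line rate.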

Benchmarks

The continuous resource improvement process involves collecting benchmarks of the system. Among the measures of greatest interest to users of the system are benchmarks of specific application codes. The following benchmarks have been performed on the system and will be further expanded as additional benchmarks are performed.

Cluster Usage Statistics

Cheaha uses Bright Cluster Manager to report cluster performance data. This information provides a helpful overview of the current and historical operating stats for Cheaha. You can access the status monitoring page here (accessible only on the UAB network or through VPN).

Availability

Cheaha is a general-purpose computer resource made available to the UAB community by UAB IT. As such, it is available for legitimate research and educational needs and is governed by UAB's Acceptable Use Policy (AUP) for computer resources.

Many software packages commonly used across UAB are available via Cheaha.

To request access to Cheaha, please send a request to the cluster support group.

Cheaha's intended use implies broad access to the community; however, no guarantees are made that specific computational resources will be available to all users. Availability guarantees can only be made for reserved resources.

Secure Shell Access

Please configure your client secure shell software to use the official host name to access Cheaha:

cheaha.rc.uab.edu

Scheduling Framework

Slurm is a queue management system and stands for Simple Linux Utility for Resource Management. Slurm was developed at the Lawrence Livermore National Lab and currently runs some of the largest compute clusters in the world. Slurm is now the primary job manager on Cheaha; it replaces Sun Grid Engine (SGE), the job manager used earlier.

Slurm is similar in many ways to GridEngine or most other queue systems. You write a batch script then submit it to the queue manager (scheduler). The queue manager then schedules your job to run on the queue (or partition in Slurm parlance) that you designate. Below we will provide an outline of how to submit jobs to Slurm, how Slurm decides when to schedule your job, and how to monitor progress.
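As an illustration of the batch script workflow described above, the following Python sketch renders a minimal Slurm batch script as text. The `#SBATCH` options used (`--job-name`, `--partition`, `--ntasks`, `--time`, `--mem-per-cpu`, `--output`) are standard `sbatch` options, but the helper `render_batch_script`, the partition name, and the resource values are placeholders chosen for illustration, not Cheaha's actual configuration. Check the partitions and limits available on the cluster before submitting.

```python
def render_batch_script(job_name, partition, ntasks, time_limit, mem_per_cpu_mb, command):
    """Return the text of a minimal Slurm batch script.

    All argument values are supplied by the caller; nothing here is
    specific to Cheaha's real partitions or limits.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --partition={partition}",       # queue ("partition" in Slurm parlance)
        f"#SBATCH --ntasks={ntasks}",             # number of tasks (processes)
        f"#SBATCH --time={time_limit}",           # wall-clock limit, HH:MM:SS
        f"#SBATCH --mem-per-cpu={mem_per_cpu_mb}",  # memory per allocated CPU, in MB
        f"#SBATCH --output={job_name}-%j.out",    # %j expands to the job ID
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


script = render_batch_script(
    job_name="hello",
    partition="example-partition",  # hypothetical partition name
    ntasks=1,
    time_limit="00:10:00",
    mem_per_cpu_mb=1024,
    command="srun hostname",
)
print(script)
```

Saved to a file such as `hello.job` (a hypothetical name), a script like this would typically be submitted with `sbatch hello.job` and monitored with `squeue -u $USER`.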

Support

Operational support for Cheaha is provided by the Research Computing group in UAB IT. For questions regarding the operational status of Cheaha, please send your request to support@listserv.uab.edu. As a user of Cheaha you will automatically be subscribed to the hpc-announce email list. This subscription is mandatory for all users of Cheaha. It is our way of communicating important information regarding Cheaha to you. The traffic on this list is restricted to official communication and has a very low volume.

We have limited capacity, however, to support non-operational issues like "How do I write a job script" or "How do I compile a program". For such requests, you may find it more fruitful to send your questions to the hpc-users email list and request help from our peers in the HPC community at UAB. As with all mailing lists, please observe common mailing list etiquette.

Finally, please remember that as you learned about HPC from others, it becomes part of your responsibility to help others on their quest. You should update this documentation or respond to mailing list requests of others.

You can subscribe to hpc-users by sending an email to:

sympa@vo.uabgrid.uab.edu with the subject subscribe hpc-users.

You can unsubscribe from hpc-users by sending an email to:

sympa@vo.uabgrid.uab.edu with the subject unsubscribe hpc-users.

You can review the list archives on the web at hpc-archives.

If you need help using the list service please send an email to:

sympa@vo.uabgrid.uab.edu with the subject help

If you have questions about the operation of the list itself, please send an email to the owners of the list:

sympa@vo.uabgrid.uab.edu with a subject relevant to your issue with the list

If you are interested in contributing to the enhancement of HPC features at UAB or would like to talk to other cluster administrators, please join the hpc developers community at UAB.