Gromacs Benchmark

(added Intro and top of page) |

(added category MD) |

||

| (15 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

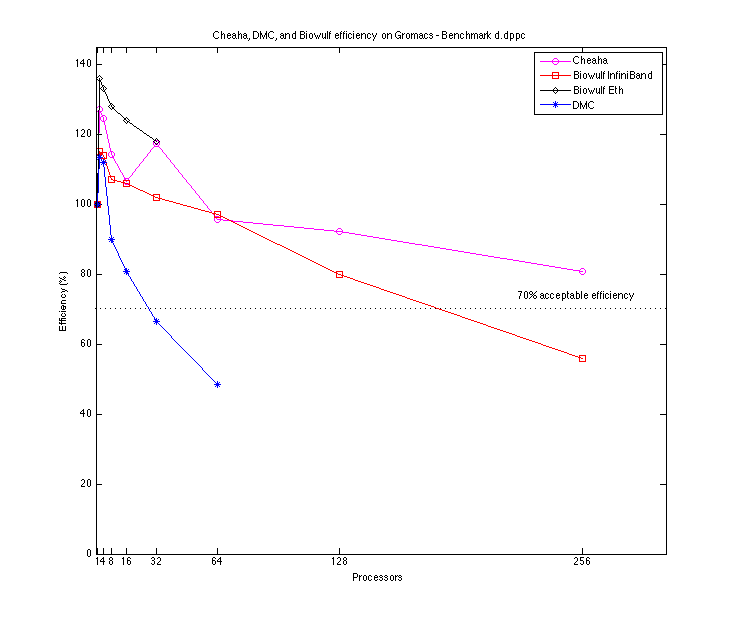

The efficiency of a parallel system describes the fraction of the time that is being used by the processors for a given computation. It is defined as | The efficiency of a parallel system describes the fraction of the time that is being used by the processors for a given computation. It is defined as | ||

<pre> | <pre> | ||

| Line 8: | Line 6: | ||

</pre> | </pre> | ||

In general, parallel jobs should scale to at least 70% efficiency. The ASC's DMC recommends a scaling efficiency of 75% or greater. For Gromacs the efficiency of a parallel job can be calculated with | In general, parallel jobs should scale to at least 70% efficiency. The ASC's DMC recommends a scaling efficiency of 75% or greater. For Gromacs the efficiency of a parallel job can be calculated with either the Wall clock parameter or the ns/day parameter. We use the wall clock and efficiency is calculated as (where N is processors committed to the job): | ||

<pre> | <pre> | ||

| Line 17: | Line 14: | ||

</pre> | </pre> | ||

Benchmark used for performance evaluation on [[Cheaha]] ,[http://biowulf.nih.gov Biowulf], and [http://www.asc.edu/supercomputing/index.shtml DMC]is the d.dppc which is available from the Gromacs benchmark suite and is available at: http://www.gromacs.org/About_Gromacs/Benchmarks | Benchmark used for performance evaluation on [[Cheaha]], [http://biowulf.nih.gov Biowulf], and [http://www.asc.edu/supercomputing/index.shtml DMC]is the d.dppc which is available from the Gromacs benchmark suite and is available at: http://www.gromacs.org/About_Gromacs/Benchmarks | ||

Information on how to submit Gromacs jobs to [[Cheaha]] is available [[Gromacs |here]]. | |||

== Sample Benchmark using Gromacs == | |||

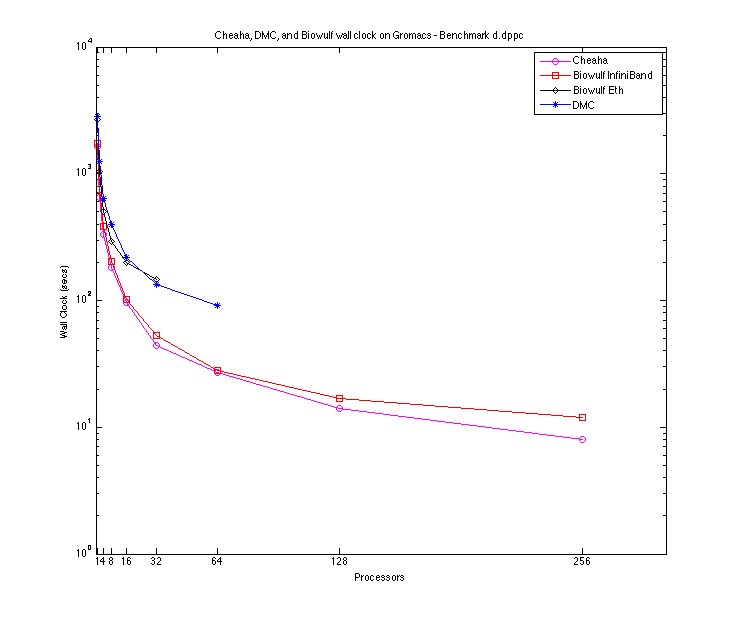

Benchmark parameters: 1024 DPPC lipids with 23 water molecules per lipid, totalling to 121856 atoms. A twin-range group based cut-off is used, 1.8 nm for electrostatics and 1.0 nm for Lennard-Jones interactions. The long-range contribution to electrostatics is updated every 10 steps. 5000 steps = 10ps. | |||

===Wall clock and efficiency on Gromacs - Benchmark d.dppc=== | |||

{| border="1" align="center" cellpadding="10" style="text-align:center;" | |||

|+ Cheaha, DMC, and Biowulf wall clock and efficiency on Gromacs - Benchmark d.dppc | |||

!Processors | |||

! Cheaha | |||

! DMC | |||

! Biowulf IB | |||

! Biowulf Ethernet | |||

|- | |||

|1 | |||

|1562 (100%) | |||

|2848 (100%) | |||

|1734 (100%) | |||

|2656 (100%) | |||

|- | |||

|2 | |||

|650 (127%) | |||

|1256 (113%) | |||

|754 (115%) | |||

|1039 (128%) | |||

|- | |||

|4 | |||

|332 (124%) | |||

|636 (112%) | |||

|382 (114%) | |||

|508 (131%) | |||

|- | |||

|8 | |||

|181 (114%) | |||

|396 (90%) | |||

|203 (107%) | |||

|294 (113%) | |||

|- | |||

|16 | |||

|97 (106%) | |||

|220 (81%) | |||

|102 (106%) | |||

|200 (83%) | |||

|- | |||

|32 | |||

|44 (117%) | |||

|134 (66%) | |||

|53 (102 %) | |||

|147 (56%) | |||

|- | |||

|64 | |||

|27 (96%) | |||

|92 (49%) | |||

|28 (97%) | |||

|NA | |||

|- | |||

|128 | |||

|14 (92 %) | |||

|NA | |||

|17 (80%) | |||

|NA | |||

|- | |||

|256 | |||

|8 (81%) | |||

|NA | |||

|12 (56%) | |||

|NA | |||

|} | |||

[[File:Gromacs_Benchmark_img1_Gromacs_wall.png|center]] | |||

[[File:Gromacs_Benckmark_img2_Gromacs_efficiency.png|center]] | |||

===ns/day (speedup)and GFlops rating on Gromacs -Benchmark d.dppc=== | |||

{| border="1" align="center" cellpadding="10" style="text-align:center;" | |||

|+ Cheaha, DMC, and Biowulf ns/day (speedup)and GFlops rating on Gromacs -Benchmark d.dppc | |||

! Processors | |||

! Cheaha ns/day(speedup) | |||

! Cheaha GFlops | |||

! DMC ns/day (speedup) | |||

! DMC GFlops | |||

! Biowulf IB ns/day (speedup) | |||

! Biowulf Ethernet ns/day(speedup) | |||

|- | |||

|1 | |||

|0.523 | |||

|2.482 | |||

|0.303 | |||

|1.428 | |||

|0.498 | |||

|0.325 | |||

|- | |||

|2 | |||

|1.329 (2.5) | |||

|6.307 | |||

|0.688 (2.2) | |||

|3.234 | |||

|1.146 (2.7) | |||

|0.832 (2.6) | |||

|- | |||

|4 | |||

|2.603 (4.9) | |||

|12.326 | |||

|1.359 (4.4) | |||

|6.381 | |||

|2.262 (5.3) | |||

|1.701 (5.2) | |||

|- | |||

|8 | |||

|4.774 (9.1) | |||

|22.613 | |||

|2.183 (7.2) | |||

|10.266 | |||

|4.257 (10.3) | |||

|2.939 (9.0) | |||

|- | |||

|16 | |||

|8.909 (17.0) | |||

|42.341 | |||

|3.922 (12.9) | |||

|18.442 | |||

|8.472 (17.0) | |||

|5.879 (18.1) | |||

|- | |||

|32 | |||

|19.64 (37.5) | |||

|93.496 | |||

|6.442 (21.2) | |||

|30.352 | |||

|16.305 (32.7) | |||

|9.933 (30.6) | |||

|- | |||

|64 | |||

|32.006 (61) | |||

|152.524 | |||

|9.432 (31.1) | |||

|44.509 | |||

|30.863 (62) | |||

|NA | |||

|- | |||

|128 | |||

|61.727 (118) | |||

|294.303 | |||

|NA | |||

|NA | |||

|50.834 (102) | |||

|NA | |||

|- | |||

|256 | |||

|108.022 (207) | |||

|516.398 | |||

|NA | |||

|NA | |||

|72.014 (144) | |||

|NA | |||

|} | |||

[[File:Gromacs_benchmark_img3_Gromacs_ns.png|center]] | |||

===Bench mark notes=== | |||

The above benchmarks were run using d.dppc benchmark [http://www.gromacs.org/About_Gromacs/Benchmarks ] from Gromacs. | |||

On Cheaha, the second and third generation hardware was used for the above benchmarks. More information about the hardware used on [[Cheaha]] is available [[Cheaha#Hardware |here]]. Cheaha is running Gromacs 4.0.7 | |||

All tests on the DMC are run on mixed generation hardware. DMC is running Gromacs 4.5.3 | |||

The data for the NIH-Biowulf benchmarks is available at: http://biowulf.nih.gov/apps/gromacs/bench-4.0.3.html. The Biowulf hardware used for comparison in the e2800 with Infiniband and o2800 with ethernet. | |||

==Other Benchmarks == | |||

These benchmarks have been performed by Mike Hanby at Research Computing after 2007 Hardware upgrade to the Cheaha. | |||

=== 2007 Hardware and Gromacs 3.x === | === 2007 Hardware and Gromacs 3.x === | ||

| Line 56: | Line 219: | ||

Performance: 76.705 5.208 1.330 18.040 | Performance: 76.705 5.208 1.330 18.040 | ||

[[Category: | [[Category:Molecular Dynamics]] | ||

Latest revision as of 15:01, 4 April 2012

The efficiency of a parallel system describes the fraction of the time that the processors spend on a given computation. It is defined as:

$$E(N) = \frac{\text{execution time using one processor}}{N \times \text{execution time using } N \text{ processors}} = \frac{t_s}{N \, t_N}$$

In general, parallel jobs should scale to at least 70% efficiency. The ASC's DMC recommends a scaling efficiency of 75% or greater. For Gromacs, the efficiency of a parallel job can be calculated from either the wall clock time or the ns/day figure. We use the wall clock time, and efficiency is calculated as follows (where N is the number of processors committed to the job):

$$\text{Efficiency} = \frac{\text{wall clock where } N = 1}{N \times \text{wall clock on } N \text{ processors}} \times 100$$
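As a minimal sketch of the formula above, the following Python function computes the efficiency from two wall clock measurements. The function name `parallel_efficiency` is illustrative and not part of Gromacs; the sample values are the DMC wall clock figures for 1 and 4 processors reported on this page.

```python
def parallel_efficiency(wall_serial, wall_parallel, n_procs):
    """Efficiency in percent: wall clock on 1 processor / (N * wall clock on N) * 100."""
    if n_procs < 1 or wall_parallel <= 0:
        raise ValueError("n_procs must be >= 1 and wall_parallel must be positive")
    return 100.0 * wall_serial / (n_procs * wall_parallel)


# DMC figures from this page: 2848 on 1 processor, 636 on 4 processors.
dmc_eff_4 = parallel_efficiency(2848, 636, 4)
print(f"{dmc_eff_4:.0f}%")  # prints "112%"
```

Values above 100% indicate superlinear scaling, which is commonly attributed to cache effects when the per-processor working set shrinks.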

The benchmark used for performance evaluation on Cheaha, Biowulf (http://biowulf.nih.gov), and DMC (http://www.asc.edu/supercomputing/index.shtml) is d.dppc, which is part of the Gromacs benchmark suite and is available at: http://www.gromacs.org/About_Gromacs/Benchmarks

Information on how to submit Gromacs jobs to Cheaha is available on the Gromacs page.

Sample Benchmark using Gromacs

Benchmark parameters: 1024 DPPC lipids with 23 water molecules per lipid, totalling 121,856 atoms. A twin-range, group-based cut-off is used: 1.8 nm for electrostatics and 1.0 nm for Lennard-Jones interactions. The long-range contribution to electrostatics is updated every 10 steps. The run length is 5000 steps (10 ps).
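The system size and run length quoted above can be cross-checked with a few lines of arithmetic. This is only a sketch: the three atoms per water molecule is an assumption (a three-site water model), and the per-lipid atom count and time step are derived from the stated totals rather than given in the text.

```python
lipids = 1024
waters_per_lipid = 23
total_atoms = 121856
atoms_per_water = 3  # assumption: three-site water model, not stated in the text

# Atoms contributed by water, then what is left per lipid.
water_atoms = lipids * waters_per_lipid * atoms_per_water
atoms_per_lipid = (total_atoms - water_atoms) // lipids

# 5000 steps covering 10 ps implies the time step in femtoseconds.
timestep_fs = 10 * 1000 / 5000

print(water_atoms, atoms_per_lipid, timestep_fs)
```

Under that assumption the totals work out to 50 atoms per lipid and a 2 fs time step.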

Wall clock and efficiency on Gromacs - Benchmark d.dppc

| Processors | Cheaha | DMC | Biowulf IB | Biowulf Ethernet |
|---|---|---|---|---|
| 1 | 1562 (100%) | 2848 (100%) | 1734 (100%) | 2656 (100%) |
| 2 | 650 (127%) | 1256 (113%) | 754 (115%) | 1039 (128%) |
| 4 | 332 (124%) | 636 (112%) | 382 (114%) | 508 (131%) |
| 8 | 181 (114%) | 396 (90%) | 203 (107%) | 294 (113%) |
| 16 | 97 (106%) | 220 (81%) | 102 (106%) | 200 (83%) |
| 32 | 44 (117%) | 134 (66%) | 53 (102%) | 147 (56%) |
| 64 | 27 (96%) | 92 (49%) | 28 (97%) | NA |
| 128 | 14 (92%) | NA | 17 (80%) | NA |
| 256 | 8 (81%) | NA | 12 (56%) | NA |
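One way to read the table against the 75% recommendation is to look for the largest processor count that still meets the threshold. The sketch below does this for the DMC column; the names `DMC_WALL` and `largest_efficient_count` are illustrative only.

```python
# DMC wall clock values from the table above, keyed by processor count.
DMC_WALL = {1: 2848, 2: 1256, 4: 636, 8: 396, 16: 220, 32: 134, 64: 92}


def largest_efficient_count(wall_by_procs, threshold=75.0):
    """Return the largest processor count whose efficiency (%) meets the threshold."""
    serial = wall_by_procs[1]
    meets = [
        n for n, wall in wall_by_procs.items()
        if 100.0 * serial / (n * wall) >= threshold
    ]
    return max(meets)


print(largest_efficient_count(DMC_WALL))  # prints 16
```

Efficiency is not always monotonic in the processor count (the Cheaha column rises again at 32 processors), so the function checks every row instead of stopping at the first one that falls below the threshold.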

ns/day (speedup) and GFlops rating on Gromacs - Benchmark d.dppc

| Processors | Cheaha ns/day (speedup) | Cheaha GFlops | DMC ns/day (speedup) | DMC GFlops | Biowulf IB ns/day (speedup) | Biowulf Ethernet ns/day (speedup) |
|---|---|---|---|---|---|---|
| 1 | 0.523 | 2.482 | 0.303 | 1.428 | 0.498 | 0.325 |
| 2 | 1.329 (2.5) | 6.307 | 0.688 (2.2) | 3.234 | 1.146 (2.7) | 0.832 (2.6) |
| 4 | 2.603 (4.9) | 12.326 | 1.359 (4.4) | 6.381 | 2.262 (5.3) | 1.701 (5.2) |
| 8 | 4.774 (9.1) | 22.613 | 2.183 (7.2) | 10.266 | 4.257 (10.3) | 2.939 (9.0) |
| 16 | 8.909 (17.0) | 42.341 | 3.922 (12.9) | 18.442 | 8.472 (17.0) | 5.879 (18.1) |
| 32 | 19.64 (37.5) | 93.496 | 6.442 (21.2) | 30.352 | 16.305 (32.7) | 9.933 (30.6) |
| 64 | 32.006 (61) | 152.524 | 9.432 (31.1) | 44.509 | 30.863 (62) | NA |
| 128 | 61.727 (118) | 294.303 | NA | NA | 50.834 (102) | NA |
| 256 | 108.022 (207) | 516.398 | NA | NA | 72.014 (144) | NA |
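The ns/day figures relate to the wall clock figures through the simulated time of the benchmark (10 ps, or 0.01 ns). The sketch below assumes the wall clock values are in seconds, which is not stated explicitly on this page but is consistent with the DMC and Biowulf single-processor rows. The function names are illustrative.

```python
SECONDS_PER_DAY = 86400
SIMULATED_NS = 0.01  # 5000 steps = 10 ps


def ns_per_day(wall_seconds, simulated_ns=SIMULATED_NS):
    """Simulated nanoseconds per day of wall clock time."""
    return simulated_ns * SECONDS_PER_DAY / wall_seconds


def speedup(ns_day_parallel, ns_day_serial):
    """Speedup relative to the single-processor run."""
    return ns_day_parallel / ns_day_serial


# DMC: 2848 s on 1 processor and 636 s on 4 processors.
dmc_1 = ns_per_day(2848)
dmc_4 = ns_per_day(636)
print(f"{dmc_1:.3f} {dmc_4:.3f}")  # prints "0.303 1.359"
print(speedup(dmc_4, dmc_1))
```

Speedups computed this way can differ from the tabulated values in the last digit, depending on how the original figures were rounded.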

Benchmark notes

The above benchmarks were run using the d.dppc benchmark from the Gromacs benchmark suite (http://www.gromacs.org/About_Gromacs/Benchmarks).

On Cheaha, the second and third generation hardware was used for the above benchmarks. More information about the hardware used on Cheaha is available in the Hardware section of the Cheaha page. Cheaha is running Gromacs 4.0.7.

All tests on the DMC were run on mixed generation hardware. DMC is running Gromacs 4.5.3.

The data for the NIH-Biowulf benchmarks is available at: http://biowulf.nih.gov/apps/gromacs/bench-4.0.3.html. The Biowulf hardware used for comparison is the e2800 with Infiniband and the o2800 with Ethernet.

Other Benchmarks

These benchmarks were performed by Mike Hanby at Research Computing after the 2007 hardware upgrade to Cheaha.

2007 Hardware and Gromacs 3.x

Note: The Gromacs 3.x code base was severely limited in spanning multiple compute nodes. The limit for 1GigE network fabrics was 4 nodes. The following performance data is provided for historical reference only and does not reflect the performance of the Gromacs 4.x code base currently installed on Cheaha.

Two identical 4-CPU Gromacs runs were submitted, and the jobs were spread out as follows based on the queue load at the time (the new nodes use Infiniband for message passing, the old nodes use TCP):

Dell Blades: 4 cpu job running on 4 compute nodes

Job ID: 71566 Submitted: 14:11:40 Completed: 17:06:03 Wall Clock: 02:54:23

NODE (s) Real (s) (%)

Time: 10462.000 10462.000 100.0

2h54:22

(Mnbf/s) (GFlops) (ns/day) (hour/ns)

Performance: 238.044 16.164 4.129 5.812

Verari: 4 cpu job running on 2 compute nodes

Job ID: 71567 Submitted: 14:11:44 Completed: 23:13:01 Wall Clock: 09:01:17

NODE (s) Real (s) (%)

Time: 32473.000 32473.000 100.0

9h01:13

(Mnbf/s) (GFlops) (ns/day) (hour/ns)

Performance: 76.705 5.208 1.330 18.040
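The two runs above can be compared directly from the reported numbers. The sketch below derives the hour/ns column from ns/day and the ratio of the two wall clock times; the variable and function names are illustrative, and the values are copied from the two job summaries.

```python
def hours_per_ns(ns_per_day):
    """Hours of wall clock time needed per simulated nanosecond."""
    return 24.0 / ns_per_day


dell_seconds = 10462.0    # Dell Blades, 4 CPUs on 4 nodes (Infiniband)
verari_seconds = 32473.0  # Verari, 4 CPUs on 2 nodes (TCP)

# How many times longer the Verari run took than the Dell Blades run.
slowdown = verari_seconds / dell_seconds

print(f"{hours_per_ns(4.129):.3f}")  # Dell Blades ns/day -> hour/ns
print(f"{slowdown:.2f}")             # prints "3.10"
```

The Verari job took roughly three times as long as the Dell Blades job for the same input.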